Navigating the Face Detection Maze

Oct 24, 2023

Oct 24, 2023

Oct 24, 2023

The first week of our adventure was nothing short of exhilarating. We dove deep into the sea of research papers, each one offering a unique perspective on face recognition. Here's a glimpse of our voyage:

Navigating the Research Seas

We embarked on a quest to understand the landscape of face recognition technology. We delved into 7-8 research papers, each using different pre-trained models and promising varying degrees of accuracy. Our mission was to find the perfect combination for our app.

The Data Dilemma 🤯

Availability of quality data is the lifeblood of any machine learning project. During our journey, we encountered a bottleneck: obtaining sufficient photos for training and testing. We had to request several classroom photos from our client to test effectiveness of models. We also had to weigh the trade-off between model speed and accuracy.

Comparing Face Detection Models 🔍

We also prepared a presentation comparing the effectiveness of different face detection models. You can access the slides and our conclusion 📑 here.

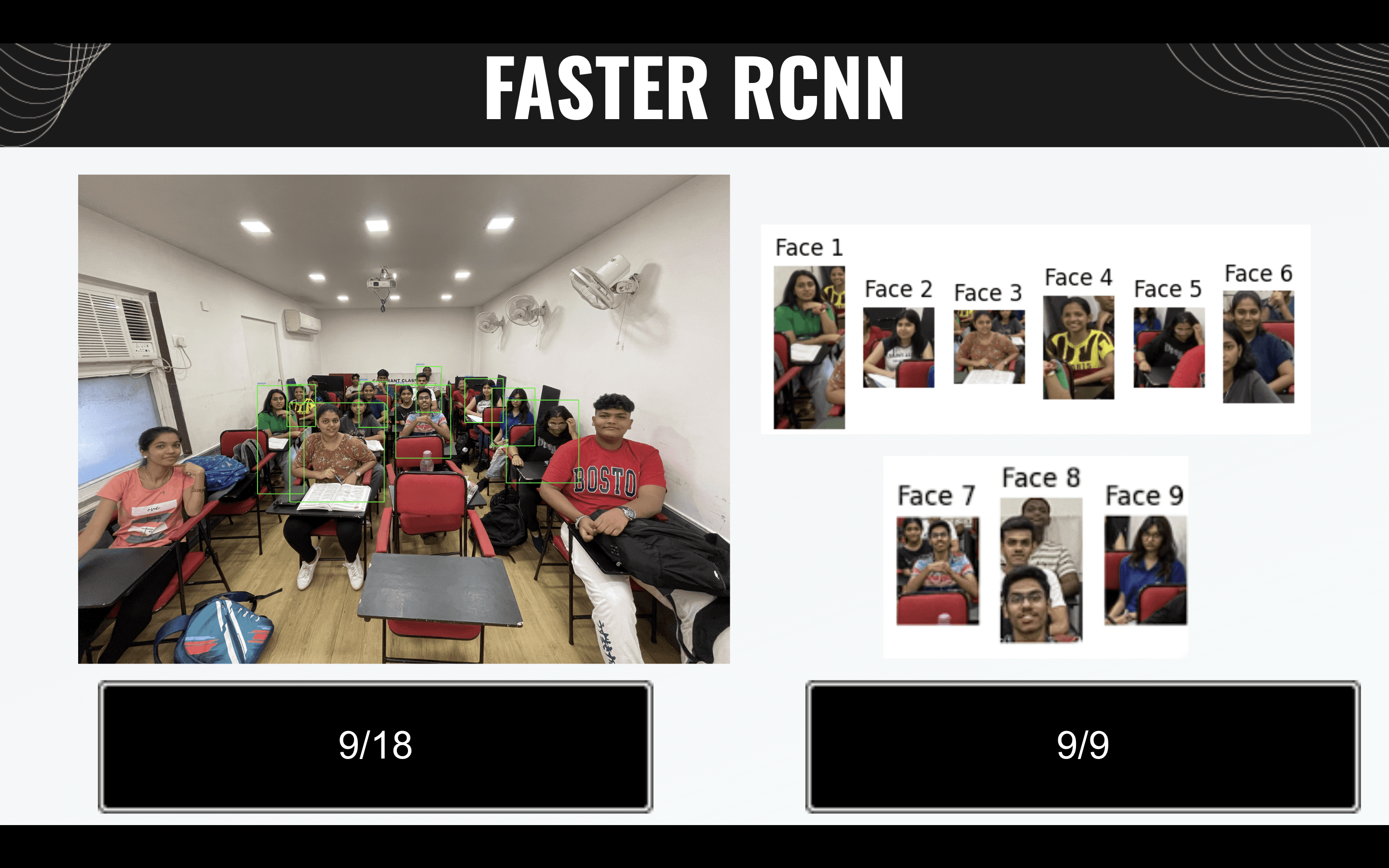

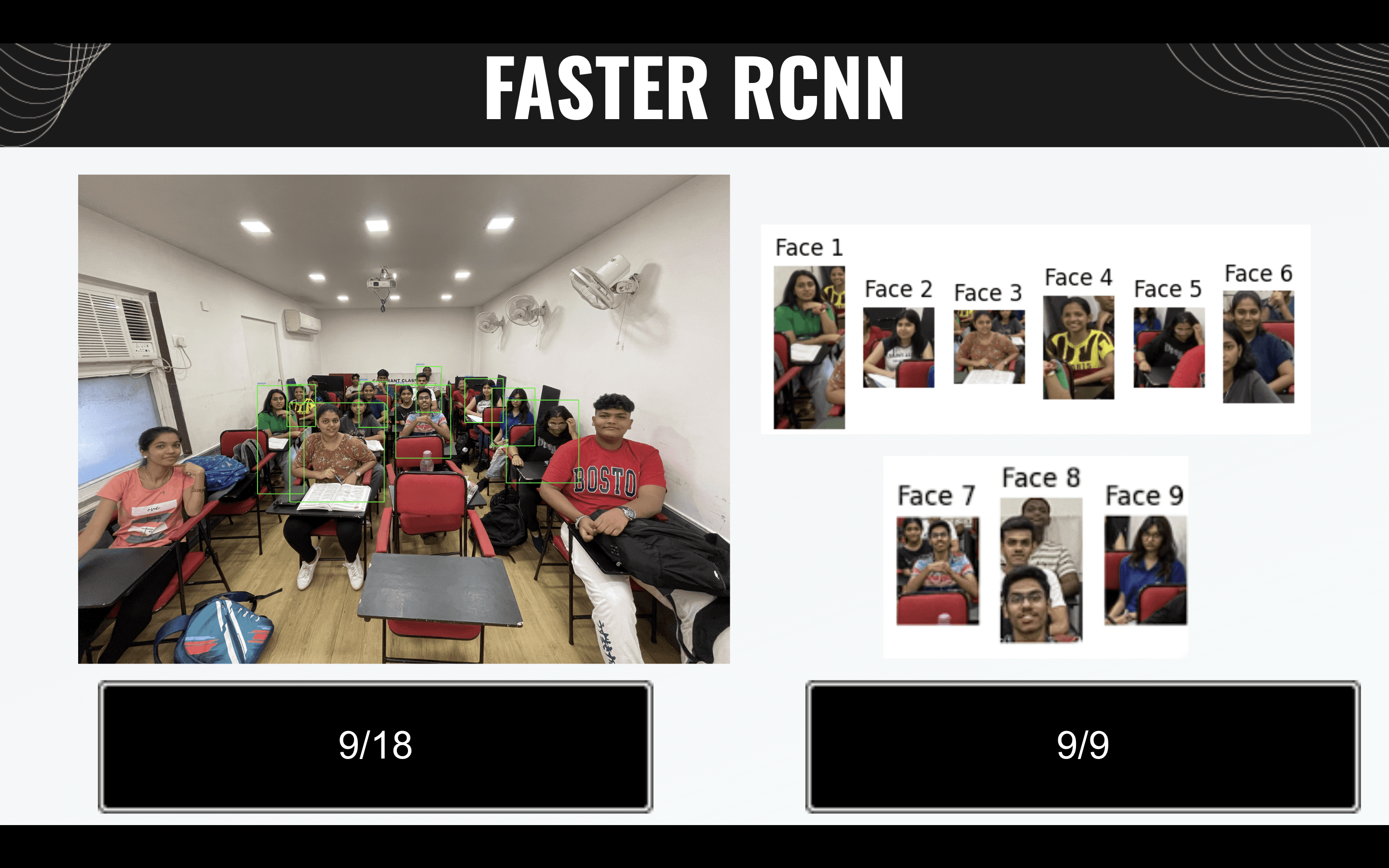

FASTER R-CNN

Speedy but Less Accurate: The Faster RCNN model is known for its remarkable processing speed, making it one of the fastest models available. However, it suffers from a significant limitation in terms of accuracy when it comes to detecting students. Out of a total of 18 students, only 9 are successfully detected by the model. This means that approximately half of the students are missed or not identified by the model, resulting in a detection rate of around 50%. This lower accuracy can be a critical issue, particularly in applications like attendance tracking or security systems, where a high level of precision in student detection is essential to avoid missing individuals and ensuring the reliability of the system.

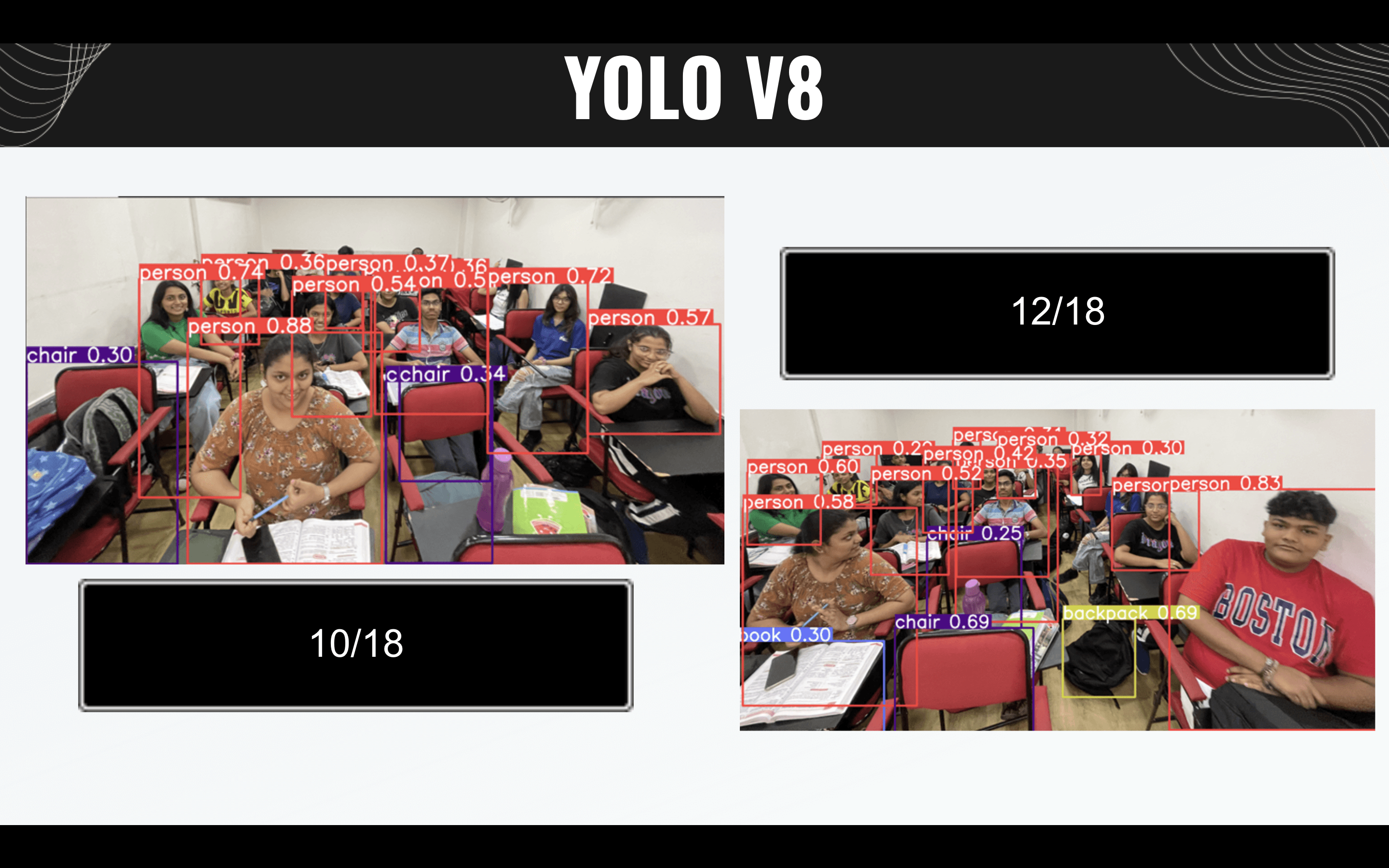

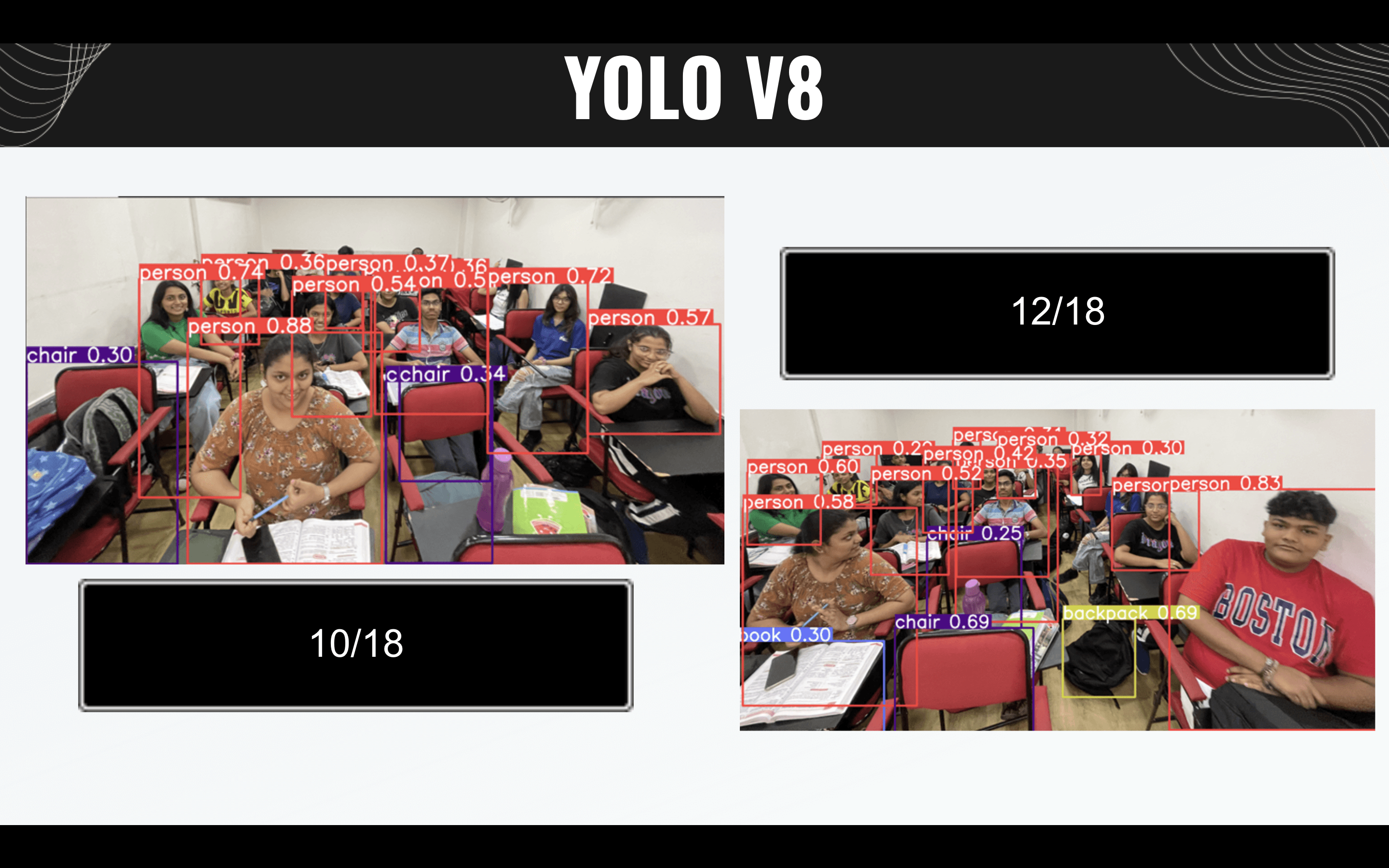

YOLO-V8

Versatile and Angle-aware: The YOLO v8 model, while slower than the Faster RCNN, offers enhanced customization options for optimizing its output, making it a versatile choice for various applications. It also exhibits angle-dependent capabilities, which can be valuable for tasks requiring precise understanding of object orientation or spatial relationships. In terms of face detection, the YOLO v8 model performs better than the Faster RCNN, successfully identifying 10 out of 18 faces. This improved detection rate positions it as a more effective choice for scenarios where accurate face detection is crucial, even though it may come at the cost of slightly slower processing speed compared to the Faster RCNN.

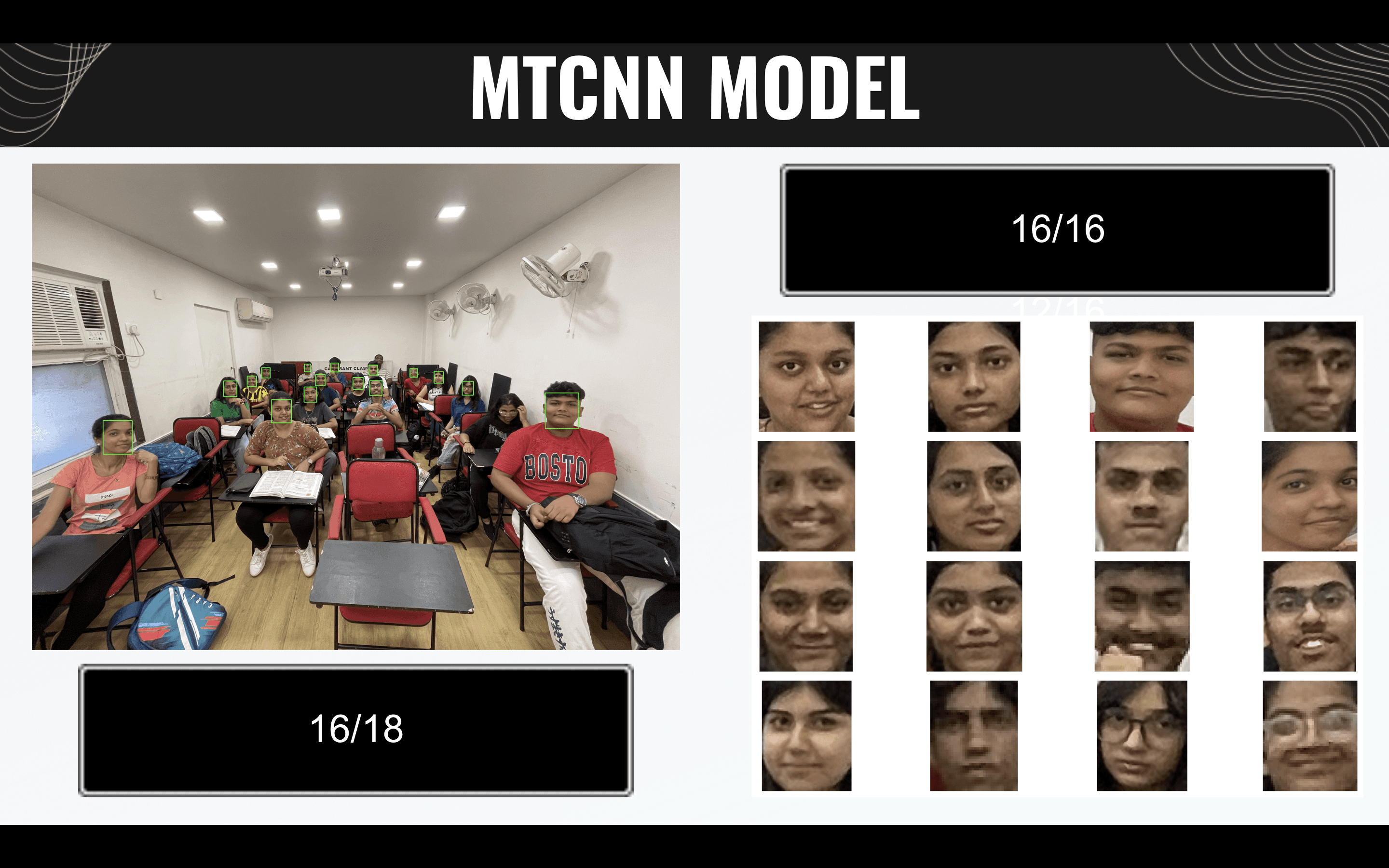

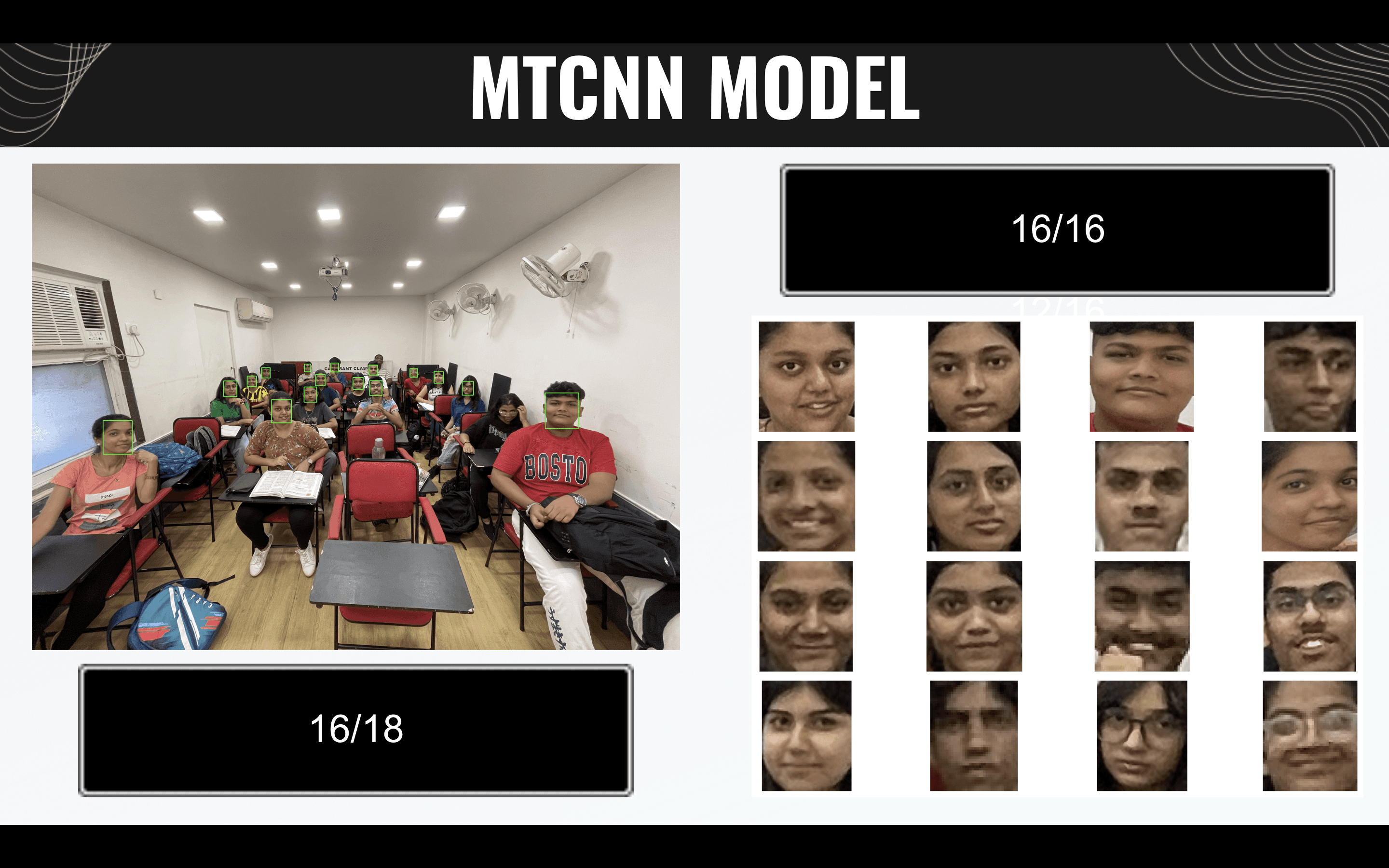

MTCNN

Versatile and Accurate: MTCNN's versatility in handling faces at different scales and orientations indeed makes it a valuable choice for various face detection applications. Its widespread use in facial recognition systems, video surveillance, and computer vision tasks highlights its effectiveness in scenarios where precise face detection is crucial.

The fact that MTCNN successfully identified 16 out of 18 faces, outperforming YOLOv8 in our specific case, underscores its reliability and accuracy in detecting faces. It's important to note that the performance of different face detection models can vary depending on the dataset, application, and specific requirements, so choosing the right model for the task at hand is essential. In your case, MTCNN has proven to be a strong performer for face detection.

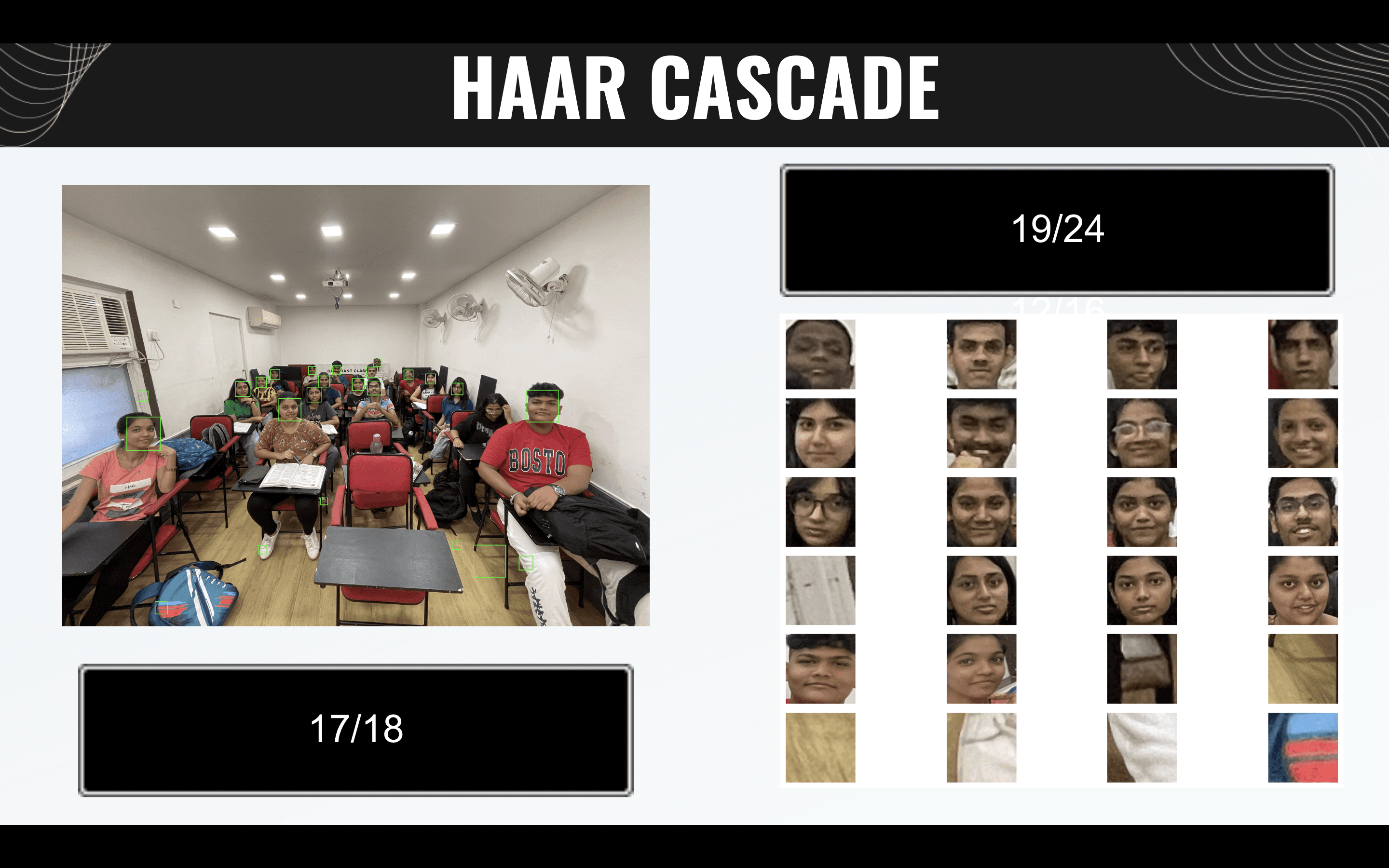

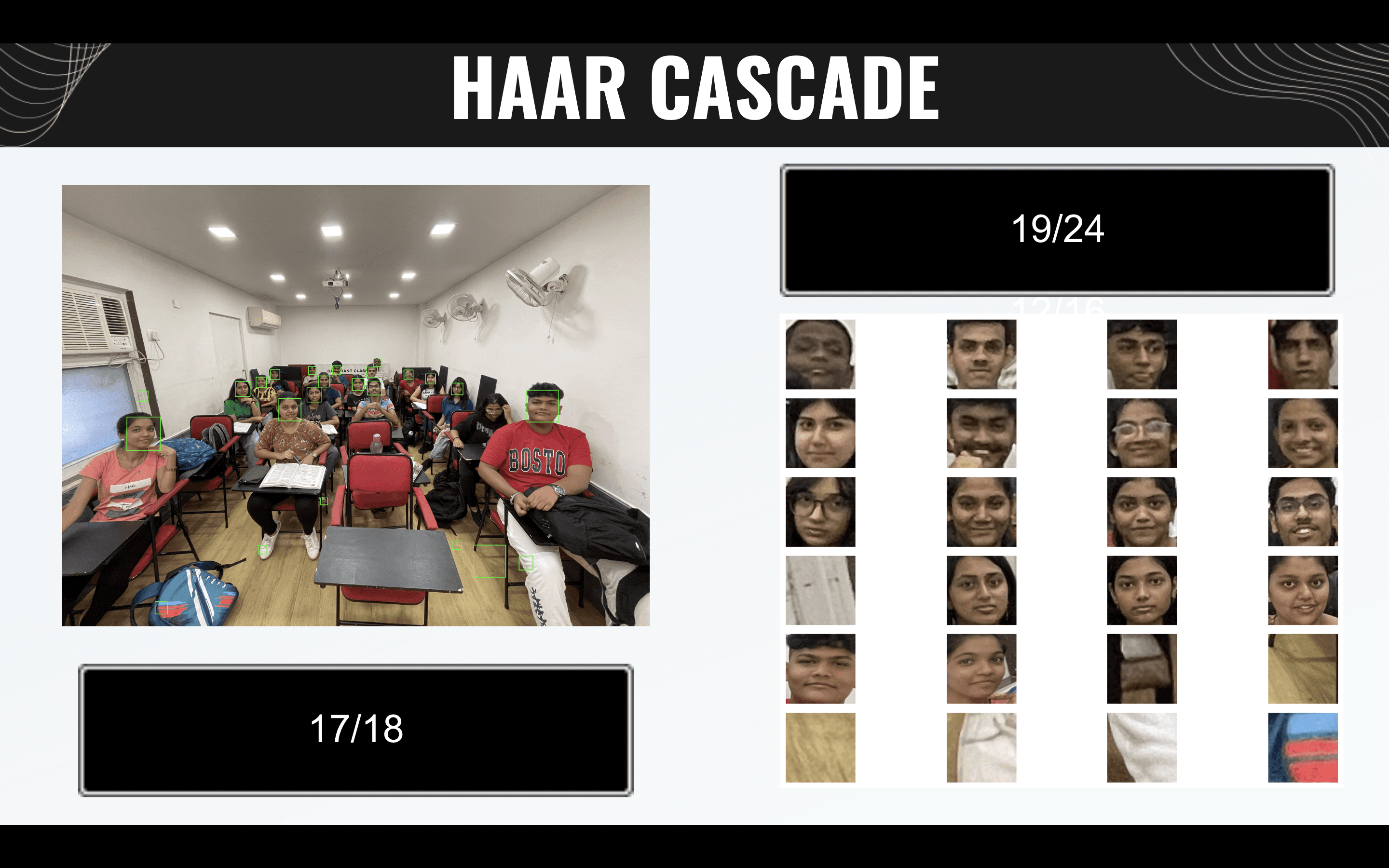

HAAR CASCADE

Efficient but Limited: The Haar Cascade classifier's effectiveness in object detection, including face recognition, stems from its training on datasets with positive and negative examples to learn distinguishing patterns. It is computationally efficient, making it suitable for real-time applications like face recognition, eye detection, and pedestrian detection.

However, it's important to note that Haar Cascades have limitations. While they perform well in many cases, they can struggle with certain edge cases, such as detecting faces when a person is facing downwards. Tackling these specific scenarios may require further testing and fine-tuning of the classifier.

One common drawback of Haar Cascades is that they can produce false positives, meaning they may detect objects or patterns that are not of interest. This can happen when the learned patterns in the classifier resemble features found in unrelated objects or backgrounds. Careful parameter tuning and post-processing techniques are often necessary to reduce false positives. It has successfully detected 17 faces out of 18.

In summary, Haar Cascade offers computational efficiency and can be effective in object detection tasks, but it may require additional adjustments to handle specific edge cases and minimize false detections, especially in scenarios where precise object recognition is essential, but it results in better accuracy than MTCNN model.

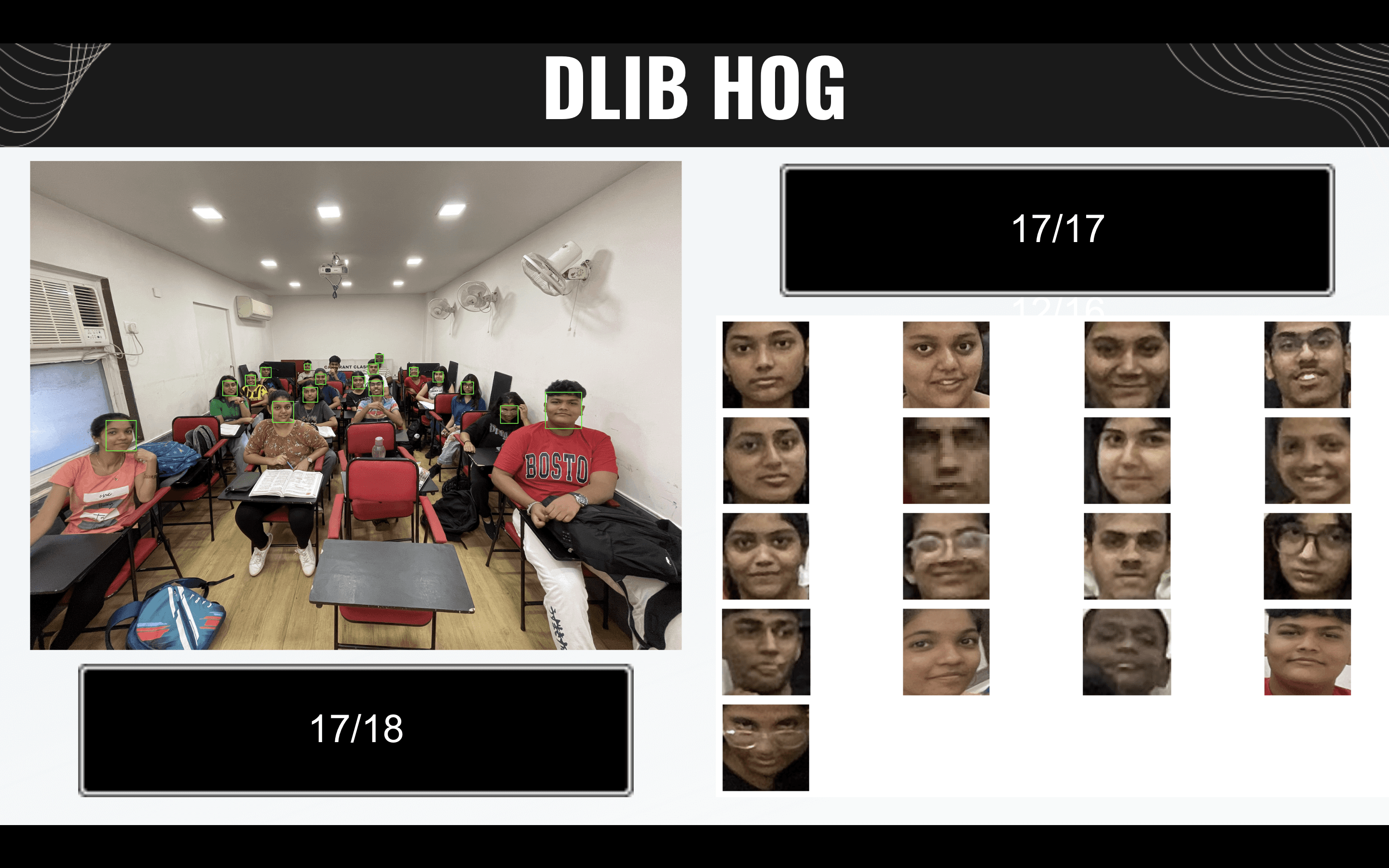

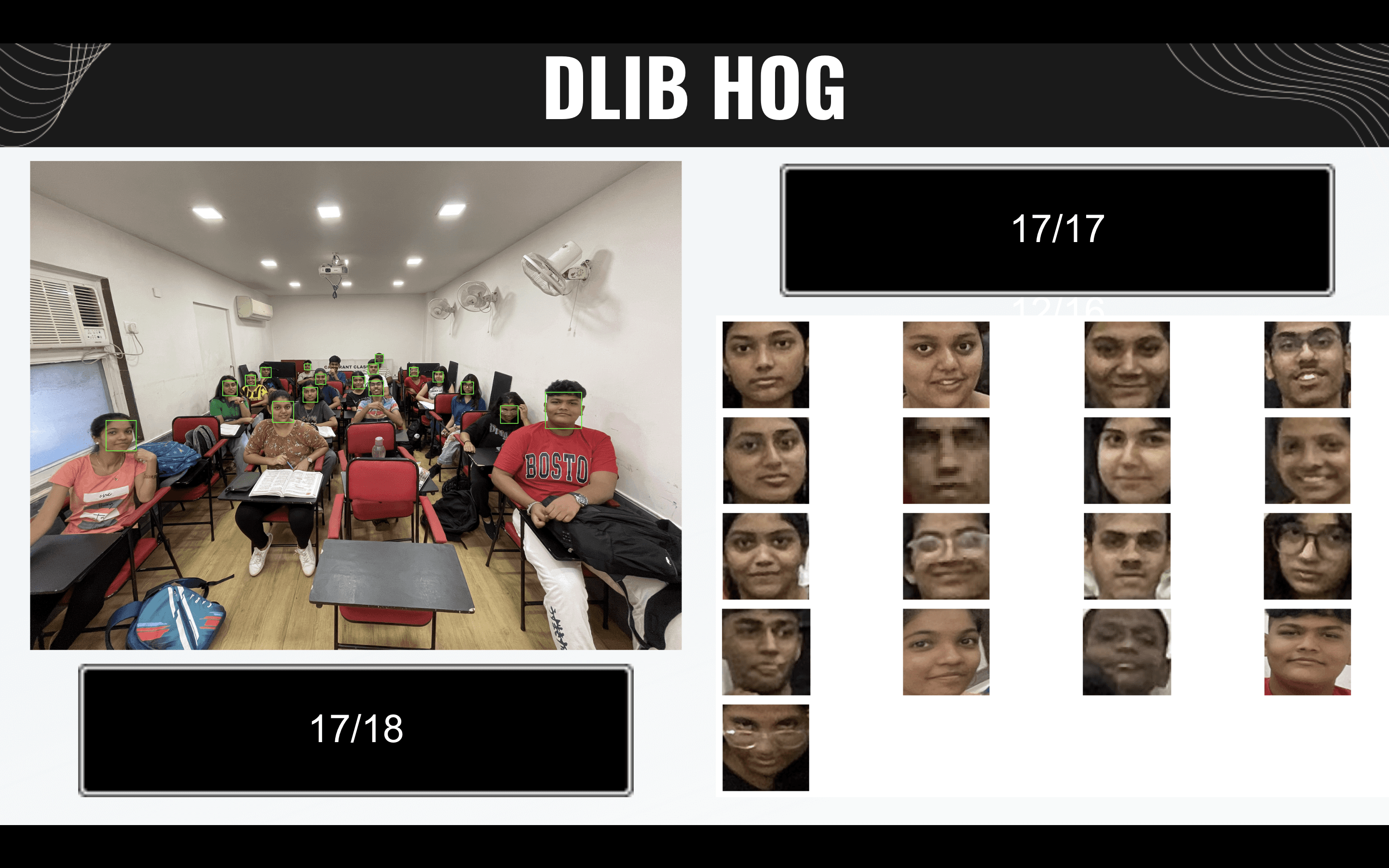

DLIB HOG

Effective and Reliable: Dlib's use of the HOG descriptor is highly effective for detecting faces by learning facial patterns from a training dataset.

Moreover, the versatility of HOG extends beyond faces; it can be applied to various object detection tasks with suitable training data. HOG is celebrated for its simplicity, efficiency, and robustness, making it a valuable asset in computer vision applications.

For our application, the HOG-based model excels by successfully detecting 17 out of 18 individuals, demonstrating its accuracy and reliability. While some fine-tuning and testing are required for addressing edge cases, it stands out for its ability to avoid false identifications, ensuring precise object recognition.

SUMMARY:

To conclude, it appears that dlib, Haar Cascade, and MTCNN have performed well in our specific application for object detection, particularly for tasks like face recognition.

Here's a summary of their relative rankings we would give:

dlib: It comes first due to its robust performance, high accuracy, and versatility. It offers the ability to fine-tune models and has successfully detected 17 out of 18 individuals without false identifications.

Haar Cascade: While effective, it tends to produce more false positives by detecting unnecessary objects. However, it still performs well in many scenarios and can be suitable for certain applications.

MTCNN: MTCNN is also a strong performer, especially for face detection. It has a good detection rate and is suitable for a variety of object recognition tasks.

and then the other models.

Lessons from the Trenches 💡

Week one taught us valuable lessons:

The Power of Documentation

We realized the importance of comprehensive documentation. Some models had custom Python modules, but their documentation was either inadequate or outdated. We had to let go of a few models due to this.Dive Deeper into actual Code

Understanding the internals of a model may seem optional, but it's worth the time. We dug into the code to ensure the models' claims were valid.Resources at Your Fingertips

We discovered a treasure trove of resources:Papers with Code: A goldmine for comparing models.

Phind: A handy tool for coding queries (though it has a daily limit before you pay).

And the usual suspects, GitHub + YouTube.

Looking Ahead

Week one was challenging but rewarding. As we move forward, we anticipate that progress might slow down. Training the model from scratch can take hours, and we'll need to address issues like different image dimensions and data standardization.

Our goal for the upcoming week? Finalize the face detection model and move on to the face recognition model. We're ready to tackle edge cases and ensure every student is accurately detected.

Stay tuned for our next update as we continue our quest to build the ultimate Attendance Tracker app! ✨

P.S. 350+ people are following the Face Recognition Challenge by email. You should too, using our newsletter sign-up form.

Found our blog helpful? Share it with friends who might benefit from it and help us grow our community of learners and innovators!

The first week of our adventure was nothing short of exhilarating. We dove deep into the sea of research papers, each one offering a unique perspective on face recognition. Here's a glimpse of our voyage:

Navigating the Research Seas

We embarked on a quest to understand the landscape of face recognition technology. We delved into 7-8 research papers, each using different pre-trained models and promising varying degrees of accuracy. Our mission was to find the perfect combination for our app.

The Data Dilemma 🤯

Availability of quality data is the lifeblood of any machine learning project. During our journey, we encountered a bottleneck: obtaining sufficient photos for training and testing. We had to request several classroom photos from our client to test effectiveness of models. We also had to weigh the trade-off between model speed and accuracy.

Comparing Face Detection Models 🔍

We also prepared a presentation comparing the effectiveness of different face detection models. You can access the slides and our conclusion 📑 here.

FASTER R-CNN

Speedy but Less Accurate: The Faster RCNN model is known for its remarkable processing speed, making it one of the fastest models available. However, it suffers from a significant limitation in terms of accuracy when it comes to detecting students. Out of a total of 18 students, only 9 are successfully detected by the model. This means that approximately half of the students are missed or not identified by the model, resulting in a detection rate of around 50%. This lower accuracy can be a critical issue, particularly in applications like attendance tracking or security systems, where a high level of precision in student detection is essential to avoid missing individuals and ensuring the reliability of the system.

YOLO-V8

Versatile and Angle-aware: The YOLO v8 model, while slower than the Faster RCNN, offers enhanced customization options for optimizing its output, making it a versatile choice for various applications. It also exhibits angle-dependent capabilities, which can be valuable for tasks requiring precise understanding of object orientation or spatial relationships. In terms of face detection, the YOLO v8 model performs better than the Faster RCNN, successfully identifying 10 out of 18 faces. This improved detection rate positions it as a more effective choice for scenarios where accurate face detection is crucial, even though it may come at the cost of slightly slower processing speed compared to the Faster RCNN.

MTCNN

Versatile and Accurate: MTCNN's versatility in handling faces at different scales and orientations indeed makes it a valuable choice for various face detection applications. Its widespread use in facial recognition systems, video surveillance, and computer vision tasks highlights its effectiveness in scenarios where precise face detection is crucial.

The fact that MTCNN successfully identified 16 out of 18 faces, outperforming YOLOv8 in our specific case, underscores its reliability and accuracy in detecting faces. It's important to note that the performance of different face detection models can vary depending on the dataset, application, and specific requirements, so choosing the right model for the task at hand is essential. In your case, MTCNN has proven to be a strong performer for face detection.

HAAR CASCADE

Efficient but Limited: The Haar Cascade classifier's effectiveness in object detection, including face recognition, stems from its training on datasets with positive and negative examples to learn distinguishing patterns. It is computationally efficient, making it suitable for real-time applications like face recognition, eye detection, and pedestrian detection.

However, it's important to note that Haar Cascades have limitations. While they perform well in many cases, they can struggle with certain edge cases, such as detecting faces when a person is facing downwards. Tackling these specific scenarios may require further testing and fine-tuning of the classifier.

One common drawback of Haar Cascades is that they can produce false positives, meaning they may detect objects or patterns that are not of interest. This can happen when the learned patterns in the classifier resemble features found in unrelated objects or backgrounds. Careful parameter tuning and post-processing techniques are often necessary to reduce false positives. It has successfully detected 17 faces out of 18.

In summary, Haar Cascade offers computational efficiency and can be effective in object detection tasks, but it may require additional adjustments to handle specific edge cases and minimize false detections, especially in scenarios where precise object recognition is essential, but it results in better accuracy than MTCNN model.

DLIB HOG

Effective and Reliable: Dlib's use of the HOG descriptor is highly effective for detecting faces by learning facial patterns from a training dataset.

Moreover, the versatility of HOG extends beyond faces; it can be applied to various object detection tasks with suitable training data. HOG is celebrated for its simplicity, efficiency, and robustness, making it a valuable asset in computer vision applications.

For our application, the HOG-based model excels by successfully detecting 17 out of 18 individuals, demonstrating its accuracy and reliability. While some fine-tuning and testing are required for addressing edge cases, it stands out for its ability to avoid false identifications, ensuring precise object recognition.

SUMMARY:

To conclude, it appears that dlib, Haar Cascade, and MTCNN have performed well in our specific application for object detection, particularly for tasks like face recognition.

Here's a summary of their relative rankings we would give:

dlib: It comes first due to its robust performance, high accuracy, and versatility. It offers the ability to fine-tune models and has successfully detected 17 out of 18 individuals without false identifications.

Haar Cascade: While effective, it tends to produce more false positives by detecting unnecessary objects. However, it still performs well in many scenarios and can be suitable for certain applications.

MTCNN: MTCNN is also a strong performer, especially for face detection. It has a good detection rate and is suitable for a variety of object recognition tasks.

and then the other models.

Lessons from the Trenches 💡

Week one taught us valuable lessons:

The Power of Documentation

We realized the importance of comprehensive documentation. Some models had custom Python modules, but their documentation was either inadequate or outdated. We had to let go of a few models due to this.Dive Deeper into actual Code

Understanding the internals of a model may seem optional, but it's worth the time. We dug into the code to ensure the models' claims were valid.Resources at Your Fingertips

We discovered a treasure trove of resources:Papers with Code: A goldmine for comparing models.

Phind: A handy tool for coding queries (though it has a daily limit before you pay).

And the usual suspects, GitHub + YouTube.

Looking Ahead

Week one was challenging but rewarding. As we move forward, we anticipate that progress might slow down. Training the model from scratch can take hours, and we'll need to address issues like different image dimensions and data standardization.

Our goal for the upcoming week? Finalize the face detection model and move on to the face recognition model. We're ready to tackle edge cases and ensure every student is accurately detected.

Stay tuned for our next update as we continue our quest to build the ultimate Attendance Tracker app! ✨

P.S. 350+ people are following the Face Recognition Challenge by email. You should too, using our newsletter sign-up form.

Found our blog helpful? Share it with friends who might benefit from it and help us grow our community of learners and innovators!