Your Database, Your Language: Simplify SQL with LLaMA

May 30, 2024

Introduction to LLaMA for database querying

Querying databases using structured query languages like SQL can be a real headache, especially for non-technical users. It's like trying to order at a restaurant abroad: you know what you want, but you just can't get the words right! This is where LLaMA (LlamaIndex) comes in like a superhero with a cape made of code, allowing users to query databases using natural language. Imagine asking your database a question the way you'd ask a friend, instead of trying to remember how to write a SELECT statement.

LlamaIndex is an open-source data framework that connects large language models to external data sources, including SQL databases. It should not be confused with Meta's LLaMA family of language models; in this article, "LLaMA" refers to LlamaIndex. It enables users to ask questions in plain English (or other natural languages) and retrieve relevant information from databases without having to write complex SQL queries themselves.

Finally, you can get the answers you need without feeling like you need a degree in computer science!

How LLaMA works for understanding and translating natural language to SQL

Data Ingestion: LLaMA first ingests the database schema and data into its index. This can be done by connecting to the database directly or by loading data from CSV/JSON files.

Embedding Generation: LLaMA uses an embedding model (for example, one of OpenAI's embedding models or an open-source alternative) to generate embeddings (numerical vector representations) of the database schema, table names, column names, and data values.

Index Creation: LLaMA then creates an index over the embedded data, allowing for efficient vector similarity searches.

Query Processing: When a user enters a natural language query, LLaMA embeds the query text with the same embedding model, so the query and the indexed schema can be compared in the same vector space.

Vector Search: LLaMA performs a vector similarity search over the index to find the database elements (tables, columns, and values) most similar to the query embedding.

SQL Generation and Execution: The language model uses the retrieved schema information to write a SQL query that answers the question, and that query is executed against the database.

Response Synthesis: Finally, LLaMA synthesizes a response from the query results and the retrieved context, typically by passing them back to the language model to phrase a natural-language answer.
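To make the ingestion, embedding, indexing, and vector-search steps more concrete, here is a minimal, dependency-free sketch that retrieves the tables most relevant to a question. It assumes a hypothetical four-table schema. The term-count `embed` function is a deliberately crude stand-in for a real embedding model. None of the function names here are LlamaIndex's API.

```python
import math
import re
import sqlite3
from collections import Counter


def ingest_schema(conn: sqlite3.Connection) -> dict[str, str]:
    """Data ingestion: describe each table as text of the form 'table: col1, col2, ...'."""
    tables = [row[0] for row in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
    schema = {}
    for table in tables:
        # Each PRAGMA table_info row is (cid, name, type, notnull, dflt_value, pk).
        columns = [col[1] for col in conn.execute(f'PRAGMA table_info("{table}")')]
        schema[table] = f"{table}: " + ", ".join(columns)
    return schema


def embed(text: str) -> Counter:
    """Toy embedding: a sparse term-count vector (assumption; a real system calls an embedding model)."""
    return Counter(t for t in re.split(r"[^a-z0-9]+", text.lower()) if t)


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors (0.0 if either is empty)."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_index(schema: dict[str, str]) -> dict[str, Counter]:
    """Index creation: embed every table description once, up front."""
    return {table: embed(description) for table, description in schema.items()}


def retrieve_tables(index: dict[str, Counter], question: str, top_k: int = 2) -> list[str]:
    """Query processing and vector search: embed the question, rank tables by similarity."""
    query_vector = embed(question)
    ranked = sorted(index, key=lambda t: cosine(query_vector, index[t]), reverse=True)
    return ranked[:top_k]


# Hypothetical schema, for illustration only.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE products (product_id INTEGER, product_name TEXT, category TEXT, unit_price REAL);
    CREATE TABLE sales (sale_id INTEGER, product_id INTEGER, quantity INTEGER, sale_date TEXT);
    CREATE TABLE employees (employee_id INTEGER, name TEXT, department TEXT);
    CREATE TABLE attendance (employee_id INTEGER, work_date TEXT, status TEXT);
    """
)

index = build_index(ingest_schema(conn))
print(retrieve_tables(index, "Show me the attendance status for employees in June"))
# → ['attendance', 'employees']
```

Replacing `embed` with a real embedding model would leave the rest of this flow unchanged. In a text-to-SQL setup, the schemas of the retrieved tables are what the language model would typically see when it writes the SQL query.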

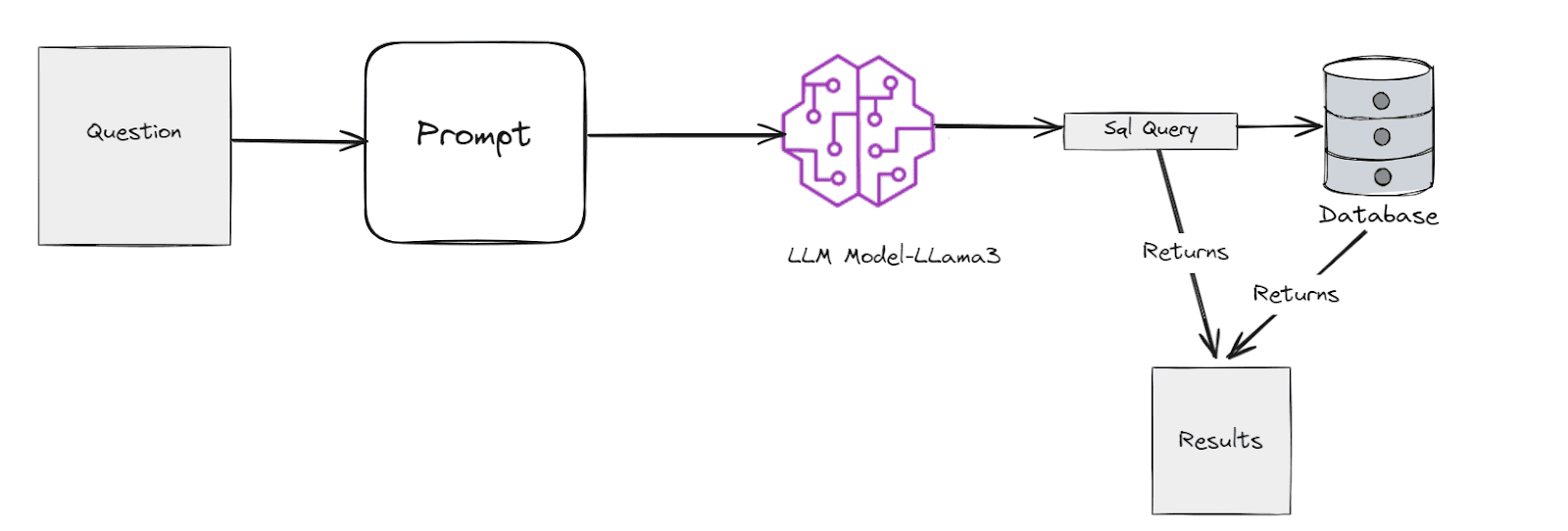

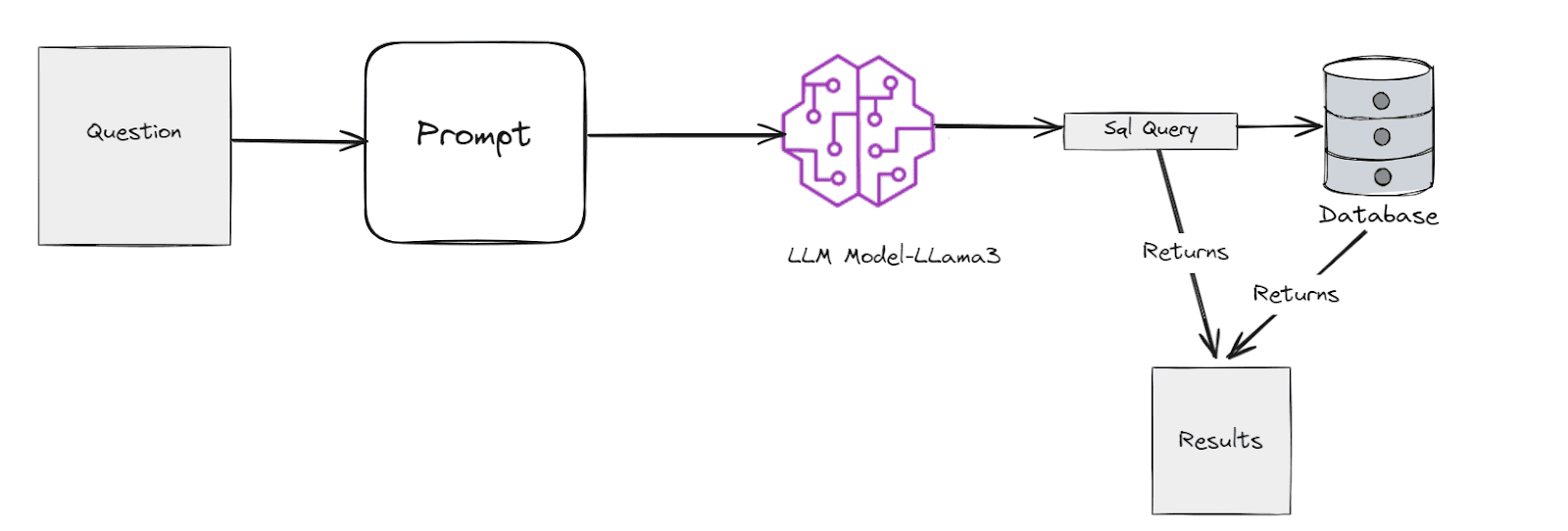

Architecture Diagram

Handling ambiguity in LLaMA queries

Ambiguity is a significant challenge for LLaMA (LlamaIndex) because it can lead to incorrect or incomplete responses, reducing the system's reliability and usefulness. When a query is ambiguous, it means that there are multiple possible interpretations of what the user is asking. This ambiguity can arise from unclear language, polysemous terms (words with multiple meanings), or insufficient context.

Handling ambiguity well is crucial for accurate responses. Common techniques include:

Query Refinement: LLaMA can interact with users to clarify queries, asking follow-up questions to better understand their intent.

Entity Disambiguation: Context can be used to distinguish between similar entities (e.g., product names) in a query, so the intended one is selected.

Query Rewriting: LLaMA can rephrase queries for clarity, expanding abbreviations or resolving vague language before the query is processed.

Domain-Specific Fine-tuning: Fine-tuning the underlying language model on domain-specific data improves its ability to interpret queries accurately within a specific context.

Confidence Scoring: The system can assign confidence scores to responses, indicating how reliable an interpretation is and suggesting alternatives when it is unsure.

User Feedback Integration: User corrections can be collected over time and used to refine future responses and improve accuracy.

External Knowledge Integration: LLaMA can draw on external knowledge sources for additional context when resolving ambiguities.
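Three of these techniques can be sketched without any model at all: query rewriting, entity disambiguation, and query refinement through a follow-up question. The abbreviation map, the product catalog, and both function names below are hypothetical. In a real system, an LLM would typically do this work, so read this as an illustration of the idea rather than how LlamaIndex implements it.

```python
import re
from typing import Optional

# Hypothetical abbreviation map used for query rewriting.
ABBREVIATIONS = {"q1": "first quarter", "hr": "human resources", "yoy": "year over year"}

# Hypothetical product catalog used for entity disambiguation.
PRODUCTS = ["Apple iPhone 15", "Apple iPad Air", "Apple Watch Series 9", "Samsung Galaxy S24"]


def rewrite_query(question: str) -> str:
    """Query rewriting: replace known abbreviations, matched as whole words, case-insensitively."""
    def expand(match: re.Match) -> str:
        word = match.group(0)
        return ABBREVIATIONS.get(word.lower(), word)
    return re.sub(r"\b\w+\b", expand, question)


def resolve_product(mention: str) -> tuple[Optional[str], Optional[str]]:
    """Entity disambiguation with query refinement.

    Returns (product, None) when exactly one catalog entry contains each word of the
    mention as a substring; otherwise returns (None, follow_up_question) so the user
    can be asked to clarify.
    """
    words = mention.lower().split()
    matches = [p for p in PRODUCTS if all(w in p.lower() for w in words)]
    if len(matches) == 1:
        return matches[0], None
    if not matches:
        return None, f"I couldn't find a product matching '{mention}'. Which product did you mean?"
    return None, f"'{mention}' could refer to: {', '.join(matches)}. Which one did you mean?"


print(rewrite_query("HR headcount in Q1"))  # → human resources headcount in first quarter
print(resolve_product("iPad"))              # → ('Apple iPad Air', None)
print(resolve_product("Apple"))             # ambiguous, so a follow-up question is returned
```

Returning a follow-up question instead of guessing keeps the system from silently choosing the wrong entity. This is the trade-off the query refinement technique is meant to make.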

Let's talk benefits…

Using LLaMA for SQL querying offers several significant benefits. It improves accessibility by allowing non-technical users to query data using natural language, making the process more inclusive. This approach also boosts productivity, saving time and effort because users no longer have to write complex SQL queries by hand. With LLaMA, all users access and query data through the same interface, which enhances collaboration and reduces errors.

Additionally, it can support data governance and security. The query engine can be limited to specific tables and run under a database account with restricted permissions, so users retrieve only data they are authorized to see. LLaMA can also integrate with existing systems such as BI tools or custom applications, fitting into familiar workflows. Finally, it empowers domain experts with limited SQL skills to explore and analyze data using their own expertise, leading to deeper insights.
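To illustrate table-level restrictions, here is a minimal sketch that runs generated SQL through Python's built-in `sqlite3` module with an authorizer callback. Only column reads from allowlisted tables are permitted, and writes are refused. The allowlist, the tables, and the `run_generated_sql` helper are hypothetical, and this is not how LlamaIndex itself enforces access. A production deployment would also rely on the database's own user permissions.

```python
import sqlite3

# Hypothetical allowlist: this user may only query sales-related tables.
ALLOWED_TABLES = {"products", "sales"}


def authorizer(action, arg1, arg2, db_name, trigger_or_view):
    """SQLite authorizer callback: permit SELECT statements, function calls, and column
    reads from allowlisted tables; deny every other action (writes, DDL, PRAGMA, ...)."""
    if action in (sqlite3.SQLITE_SELECT, sqlite3.SQLITE_FUNCTION):
        return sqlite3.SQLITE_OK
    if action == sqlite3.SQLITE_READ:
        # For SQLITE_READ, arg1 is the table name and arg2 the column name.
        return sqlite3.SQLITE_OK if arg1 in ALLOWED_TABLES else sqlite3.SQLITE_DENY
    return sqlite3.SQLITE_DENY


def build_demo_db() -> sqlite3.Connection:
    """Create a small in-memory database, then install the authorizer."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE products (product_id INTEGER PRIMARY KEY, product_name TEXT);
        CREATE TABLE sales (sale_id INTEGER PRIMARY KEY, product_id INTEGER, quantity INTEGER);
        CREATE TABLE employees (employee_id INTEGER PRIMARY KEY, name TEXT, salary REAL);
        INSERT INTO products VALUES (1, 'Widget'), (2, 'Gadget');
        INSERT INTO sales VALUES (1, 1, 5), (2, 2, 3);
        INSERT INTO employees VALUES (1, 'Ada', 120000);
        """
    )
    # Installed after setup, so the guard applies only to the generated queries below.
    conn.set_authorizer(authorizer)
    return conn


def run_generated_sql(conn: sqlite3.Connection, sql: str):
    """Execute SQL produced from a natural-language question, or report that it was refused."""
    try:
        return conn.execute(sql).fetchall()
    except sqlite3.DatabaseError as exc:
        return f"refused: {exc}"


conn = build_demo_db()
print(run_generated_sql(conn, "SELECT product_name FROM products ORDER BY product_id"))
print(run_generated_sql(conn, "SELECT name, salary FROM employees"))  # table not allowlisted
print(run_generated_sql(conn, "DELETE FROM sales"))                   # writes are denied
```

The restriction is enforced by the database engine when each statement is prepared, not by inspecting the generated SQL text. Text-based checks are easier to get wrong when a model can phrase the same query in many ways.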

Conclusion

Using LLaMA (LlamaIndex) to query databases in natural language significantly enhances data accessibility and usability. By allowing users to interact with databases in plain English, LLaMA hides the complexity of writing SQL queries, making data retrieval more intuitive and efficient.

For instance, a sales manager who needs to find the top-performing products in the last quarter can simply ask, "What were our best-selling products in Q1?" instead of constructing a complex SQL query. Similarly, a human resources professional seeking employee attendance records can query, "Show me the attendance for all employees in June," and get immediate results without needing technical SQL knowledge.
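For comparison, here is one SQL query that the sales manager's question could translate to, run against a tiny in-memory SQLite database. Several details are assumptions made for illustration: the table and column names, the sample rows, Q1 2024 as the quarter, and "best-selling" meaning total units sold. The SQL an LLM actually generates would depend on the real schema.

```python
import sqlite3

# Hypothetical schema and sample rows, for illustration only.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE products (product_id INTEGER PRIMARY KEY, product_name TEXT);
    CREATE TABLE sales (sale_id INTEGER PRIMARY KEY, product_id INTEGER,
                        quantity INTEGER, sale_date TEXT);
    INSERT INTO products VALUES (1, 'Widget'), (2, 'Gadget'), (3, 'Gizmo');
    INSERT INTO sales VALUES
        (1, 1, 10, '2024-01-15'),
        (2, 2, 25, '2024-02-03'),
        (3, 1, 30, '2024-03-20'),
        (4, 3, 50, '2024-04-02');  -- April sale: outside Q1, so it is excluded
    """
)

# "What were our best-selling products in Q1?" expressed as SQL
# (assumes Q1 2024 and ranks products by total units sold).
TOP_PRODUCTS_Q1 = """
    SELECT p.product_name, SUM(s.quantity) AS units_sold
    FROM sales AS s
    JOIN products AS p ON p.product_id = s.product_id
    WHERE s.sale_date >= '2024-01-01' AND s.sale_date < '2024-04-01'
    GROUP BY p.product_name
    ORDER BY units_sold DESC
    LIMIT 5
"""

for name, units in conn.execute(TOP_PRODUCTS_Q1):
    print(f"{name}: {units}")
# → Widget: 40
# → Gadget: 25
```

Writing this by hand requires knowing the join key, the date format, and how to express the quarter boundary. A natural-language interface hides exactly these details from the user.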

Overall, leveraging LLaMA for natural language queries transforms how enterprises interact with their databases, making data retrieval more accessible, efficient, and user-friendly. This marks a significant step towards smarter and more inclusive data management, enabling better decision-making across many domains.
