Smart Choices in AI: When to RAG, When to Fine-Tune

Mar 29, 2024

From Basic to Brilliant: The AI Application Revolution

The leap from basic, one-size-fits-all AI to truly customized and dynamic solutions has been nothing short of revolutionary, largely due to the prowess of Large Language Models (LLMs). But the real magic happens when we sprinkle in some extra tricks—like fine-tuning or diving into the wizardry of Retrieval-Augmented Generation (RAG).

Choosing between RAG and fine-tuning isn't just about picking a tool; it's about making a strategic decision that could make or break your AI application’s performance. So, what’s the smart move?

To RAG or Fine-tune, that is the question!

Where Do LLMs Fall Short?

Enhancing existing LLMs with techniques like RAG or fine-tuning is essential to tailor AI applications to specific needs. While LLMs are powerful, they often lack domain-specific knowledge or up-to-date information.

RAG supplies the model with external, up-to-date knowledge at query time, keeping responses relevant and current. Fine-tuning, on the other hand, adapts the model itself to a particular style, domain, or body of user feedback, which tends to improve accuracy and relevance on that target task.

What Is Retrieval-Augmented Generation, or RAG?

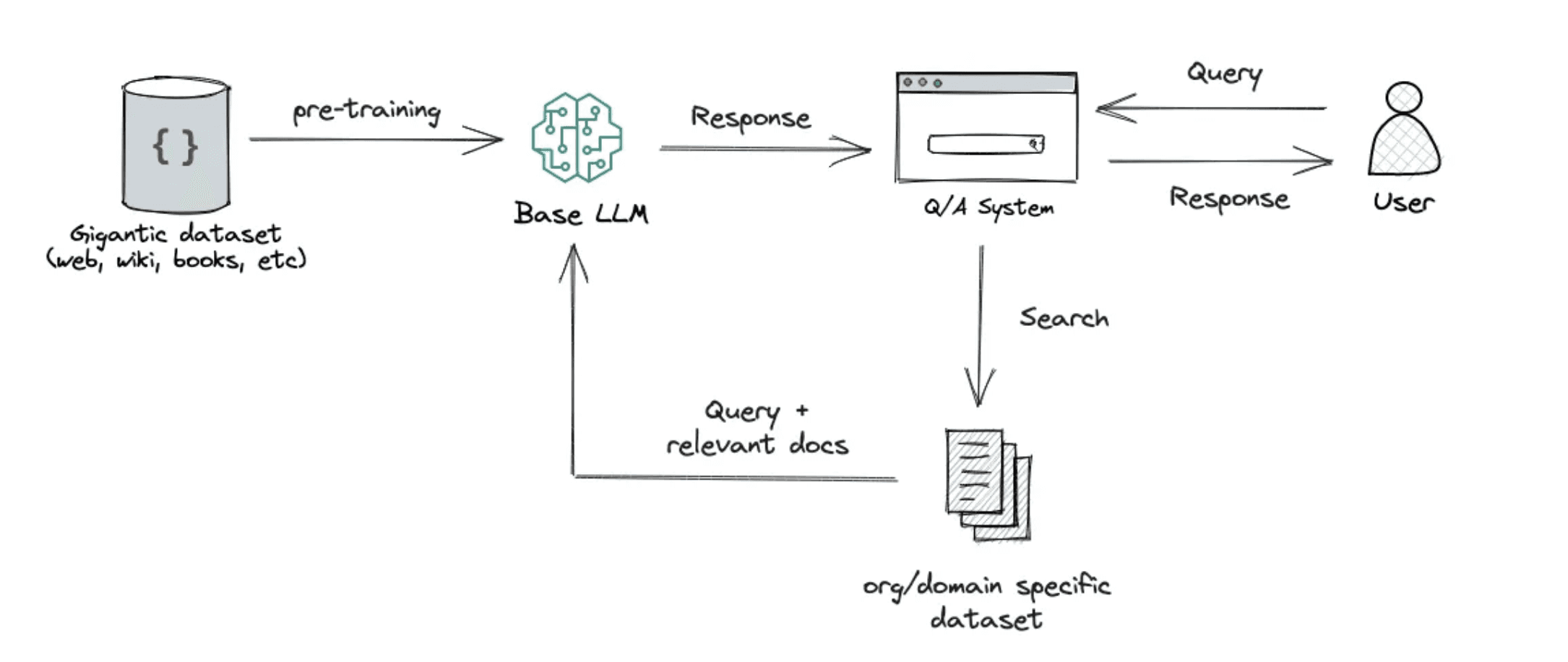

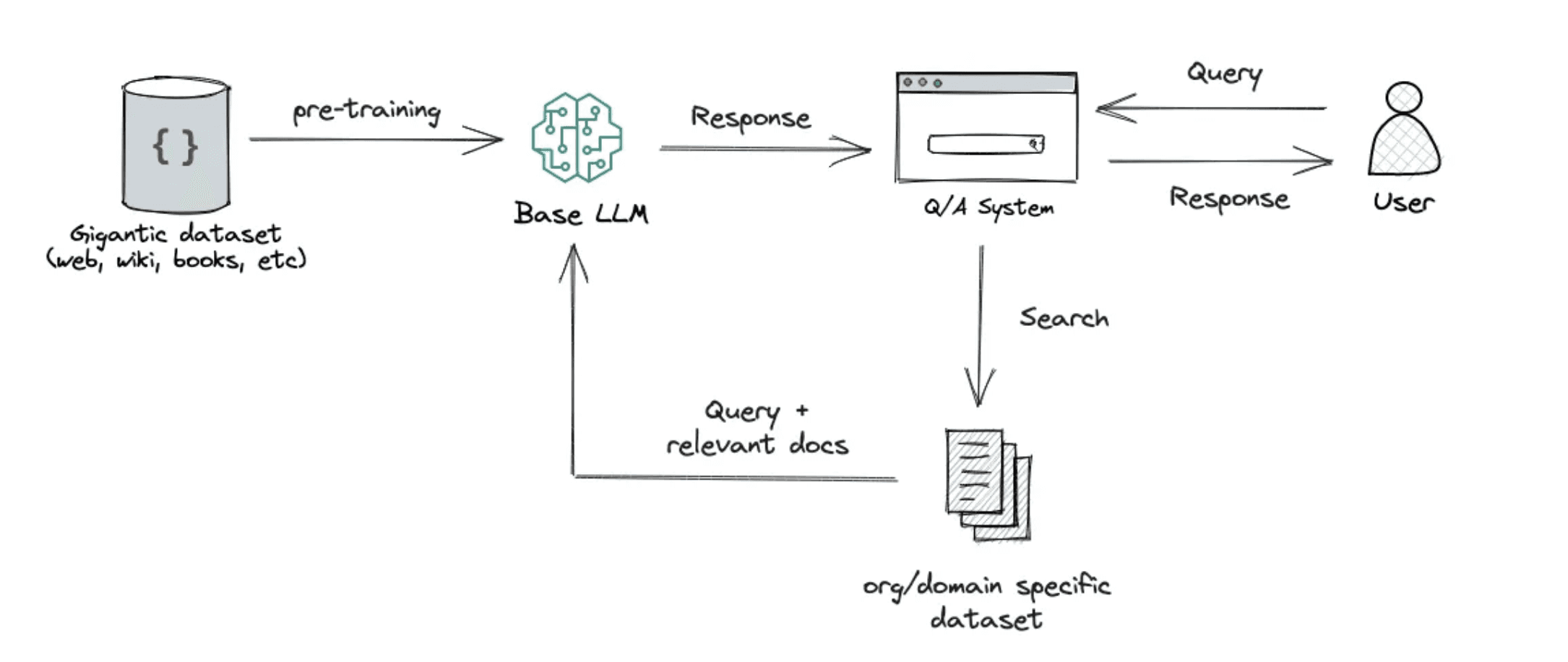

RAG adds a dynamic step at query time rather than changing how the model was trained. It acts as a turbo-boost for LLMs, letting them consult an external knowledge base while answering to enhance their responses.

When an LLM equipped with RAG receives a query, it doesn’t just draw on what it learned in training. It also retrieves relevant passages from outside documents and adds them to the prompt, which gives the model richer, more accurate material to work from. For instance, a RAG-enabled support chatbot isn’t confined to its initial programming; it can fetch the latest information to address inquiries with precision, much like a researcher consulting updated resources.

By marrying the LLM’s foundational knowledge with the agility of information retrieval, RAG transforms AI systems into even more powerful tools, capable of providing informed and contextually relevant answers.
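The retrieve-then-generate flow described above can be sketched in a few lines. This is a minimal illustration, not a production pipeline: the `DOCUMENTS` list, the bag-of-words cosine scoring, and the prompt wording are all assumptions made for the example. Real systems usually use embedding models and a vector store for retrieval, and the final prompt would be sent to whichever LLM you use.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base; in practice these would be chunks of your own documents.
DOCUMENTS = [
    "Refunds are processed within 5 business days of approval.",
    "Premium plans include priority support and a 99.9% uptime SLA.",
    "Passwords can be reset from the account settings page.",
]

def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    """Cosine similarity between two token-count vectors (Counters)."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    query_vec = Counter(tokenize(query))
    ranked = sorted(
        documents,
        key=lambda doc: cosine(query_vec, Counter(tokenize(doc))),
        reverse=True,
    )
    return ranked[:k]

def build_prompt(query, documents, k=1):
    """Augment the user's question with retrieved context before generation."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )

# The resulting prompt is what would be passed to the LLM.
prompt = build_prompt("How do I reset my password?", DOCUMENTS)
print(prompt)
```

Note that the model itself is untouched here: updating the chatbot's knowledge means editing `DOCUMENTS`, not retraining anything.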

What is Fine-Tuning?

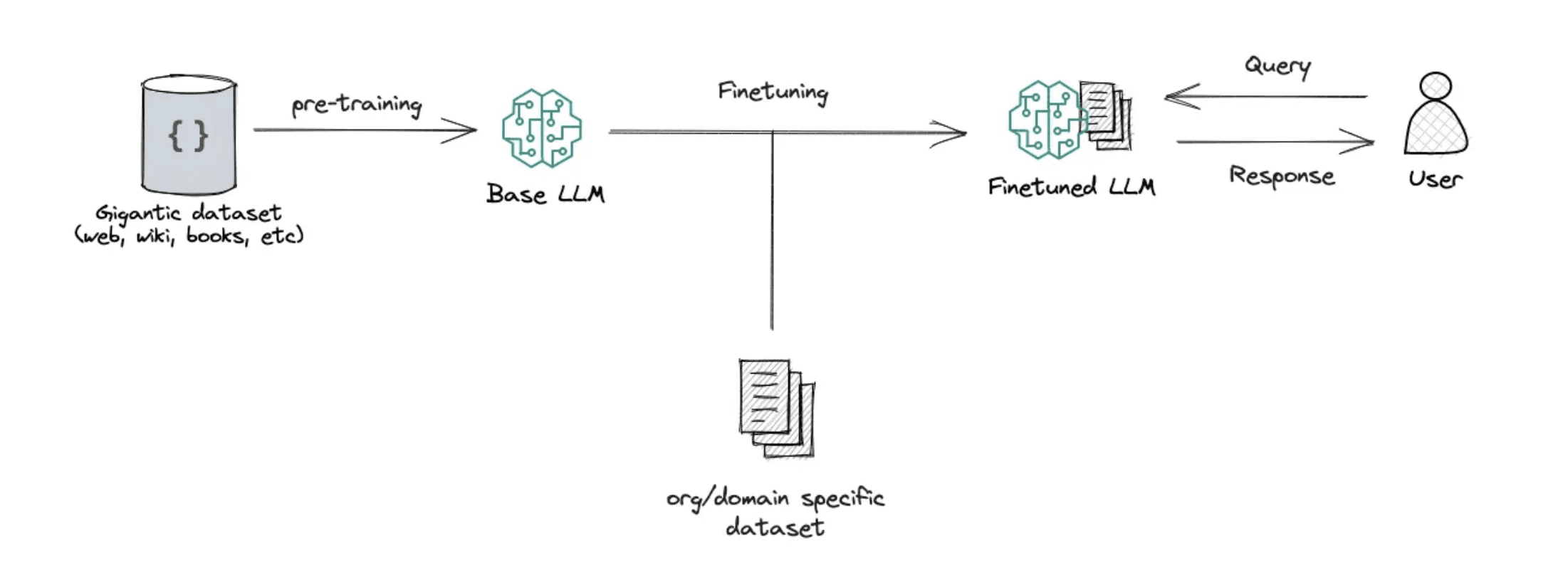

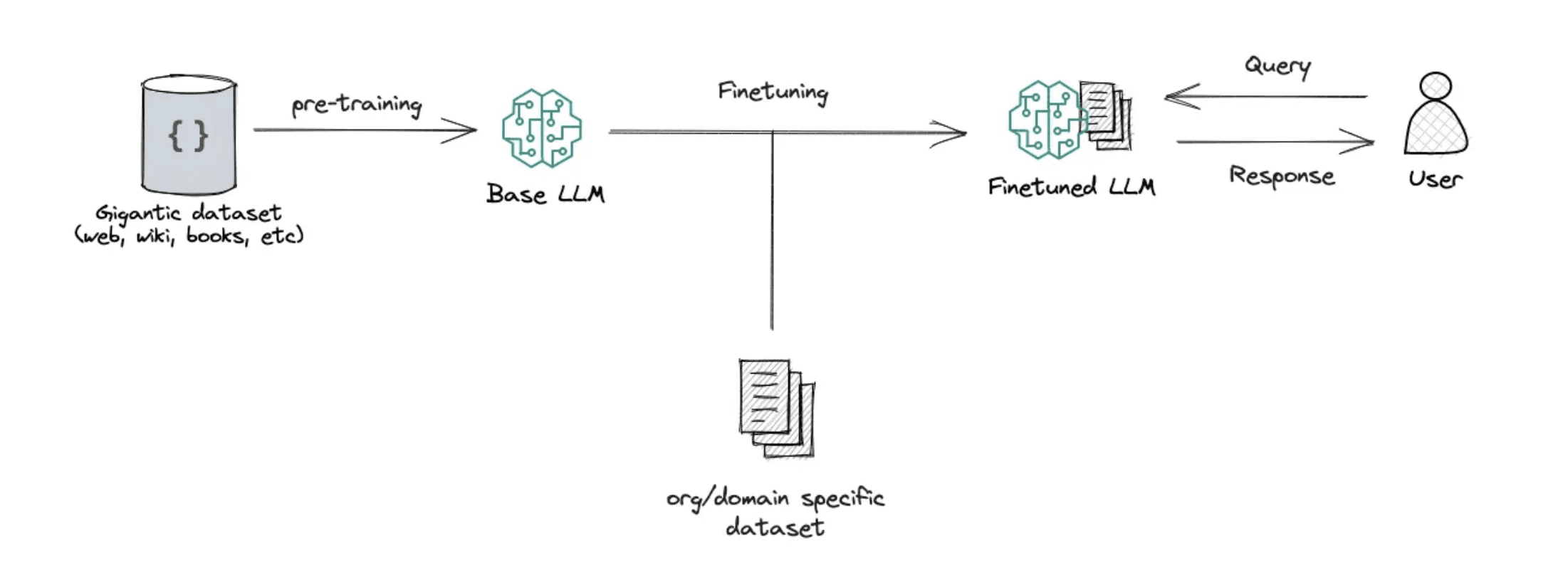

Fine-tuning is like giving a well-trained athlete specialized coaching to excel even more in their sport. With a large language model (LLM) that has already learned a vast amount from a huge dataset, fine-tuning is the next step to boost its performance. Rather than learning from scratch, it's about honing the model's skills to make it more adept at specific tasks. Fine-tuning is applied when one wants the LLM's behavior to change, or to learn a different "language."

In practical terms, fine-tuning continues training the LLM on a set of detailed, task-specific data, updating its weights (the model's learned parameters) rather than starting from random values. This specialized training makes the model a pro in the areas you need, from understanding industry jargon to answering complex queries with expertise.
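To make the idea of "updating existing weights" concrete, here is a deliberately tiny sketch. It uses a three-weight logistic regression as a stand-in for an LLM, which is an assumption made purely for illustration: the `pretrained` values and `task_data` examples are invented, and real fine-tuning runs on billions of parameters with a deep learning framework. The mechanics are the same in spirit, though: start from weights that already exist and nudge them with gradient steps on task-specific examples.

```python
import math

def predict(weights, features):
    """Probability of the positive class under a logistic model."""
    z = sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))

def loss(weights, data):
    """Average cross-entropy loss over (features, label) pairs."""
    eps = 1e-12  # avoids log(0)
    total = 0.0
    for features, label in data:
        p = predict(weights, features)
        total -= label * math.log(p + eps) + (1 - label) * math.log(1 - p + eps)
    return total / len(data)

def fine_tune(weights, data, lr=0.1, epochs=200):
    """Continue training from existing weights using stochastic gradient descent."""
    weights = list(weights)  # copy so the pretrained weights are left untouched
    for _ in range(epochs):
        for features, label in data:
            error = predict(weights, features) - label
            weights = [w - lr * error * x for w, x in zip(weights, features)]
    return weights

# Hypothetical "pretrained" weights standing in for a general-purpose model.
pretrained = [0.5, -0.2, 0.1]

# Hypothetical task-specific examples: (features, label); the first feature is a bias term.
task_data = [
    ([1.0, 2.0, 0.5], 1),
    ([1.0, 0.3, 2.5], 0),
    ([1.0, 1.8, 0.2], 1),
    ([1.0, 0.1, 1.9], 0),
]

tuned = fine_tune(pretrained, task_data)
print("loss before:", round(loss(pretrained, task_data), 3))
print("loss after: ", round(loss(tuned, task_data), 3))
```

Unlike the RAG case, the knowledge gained here lives inside the weights, so changing it later means another round of training.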

When to Use RAG Over Fine-Tuning?

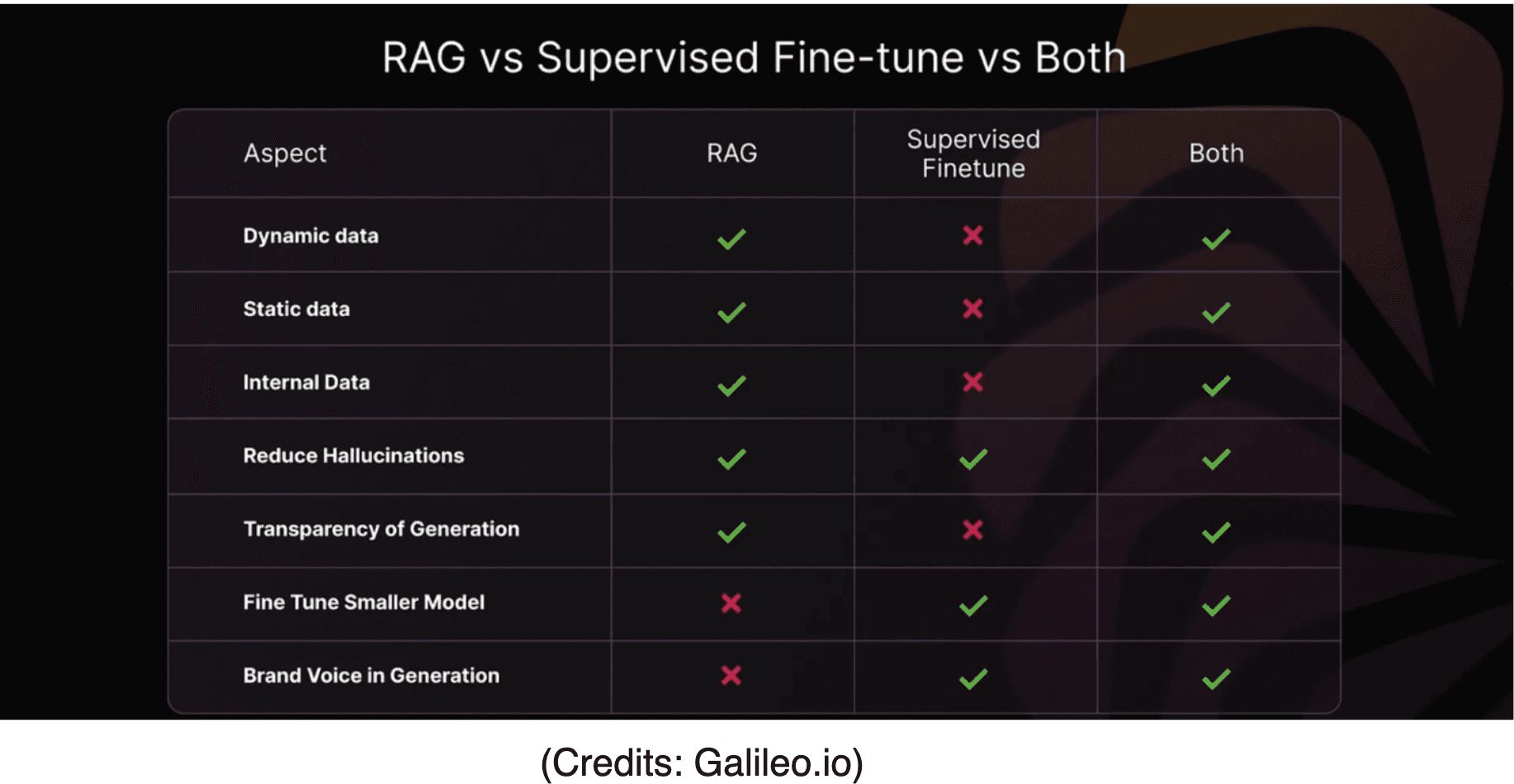

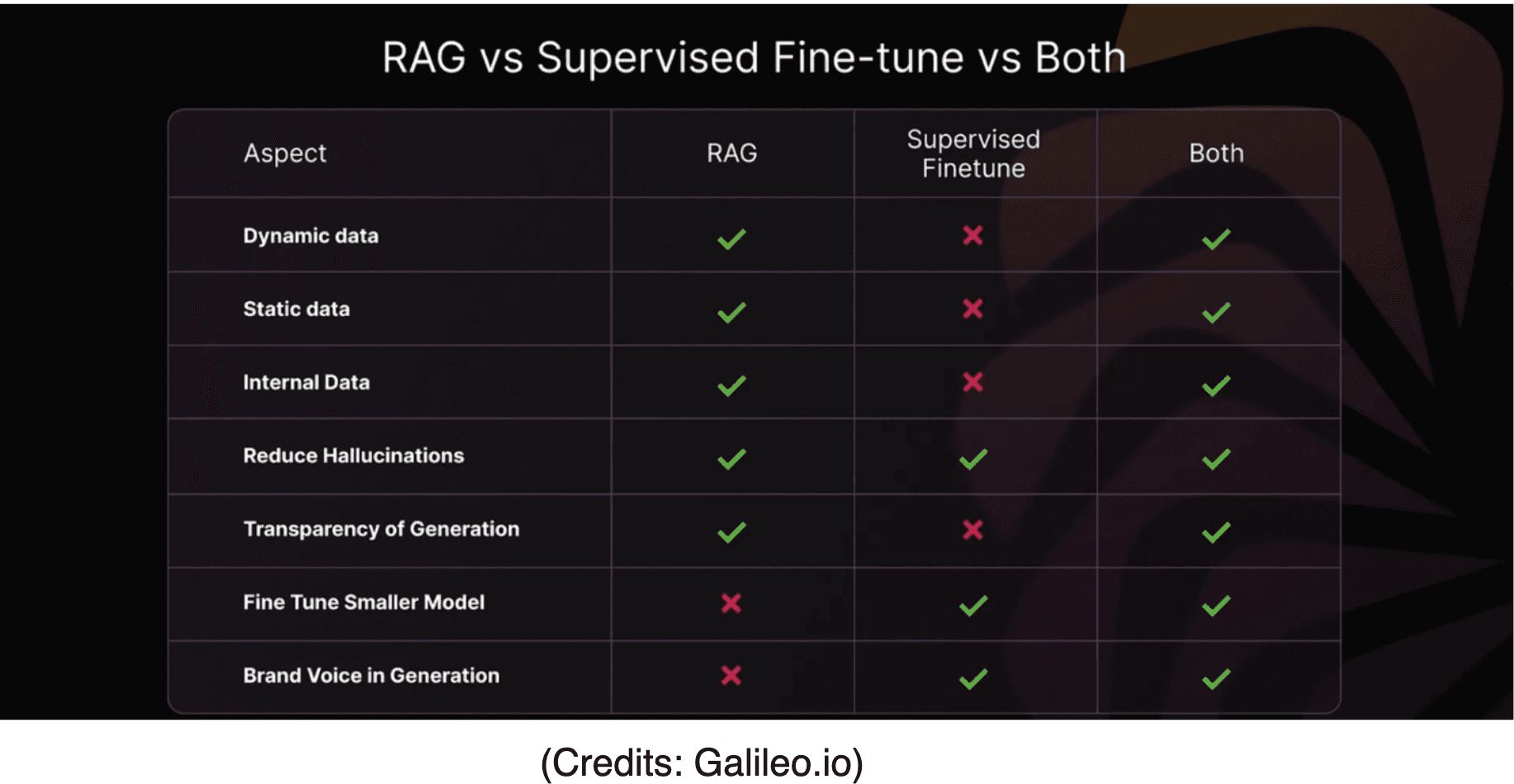

Deciding whether to employ Retrieval-Augmented Generation (RAG) or fine-tuning hinges on the specific optimization needs of your Large Language Model (LLM) applications. Each method hones the AI's abilities in distinct ways.

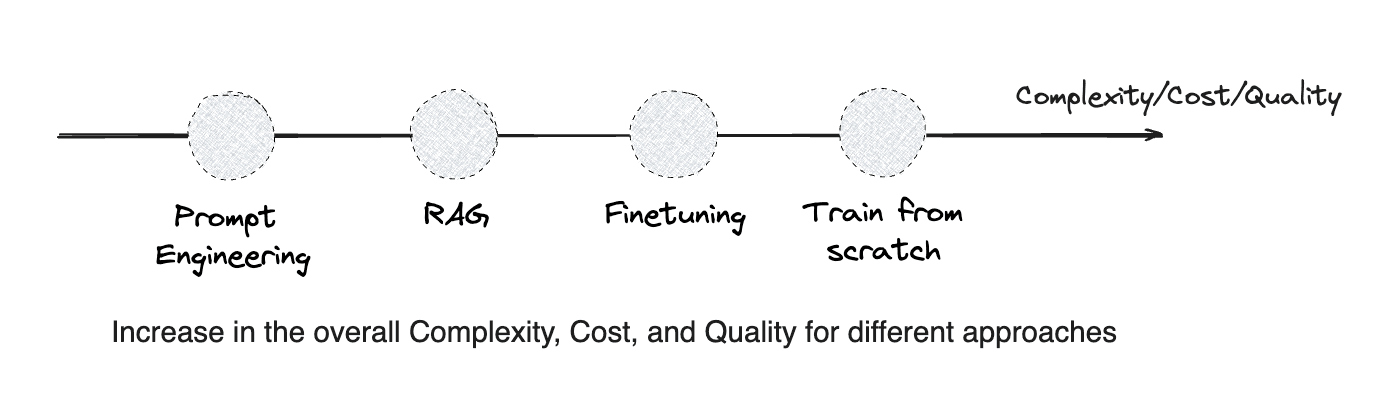

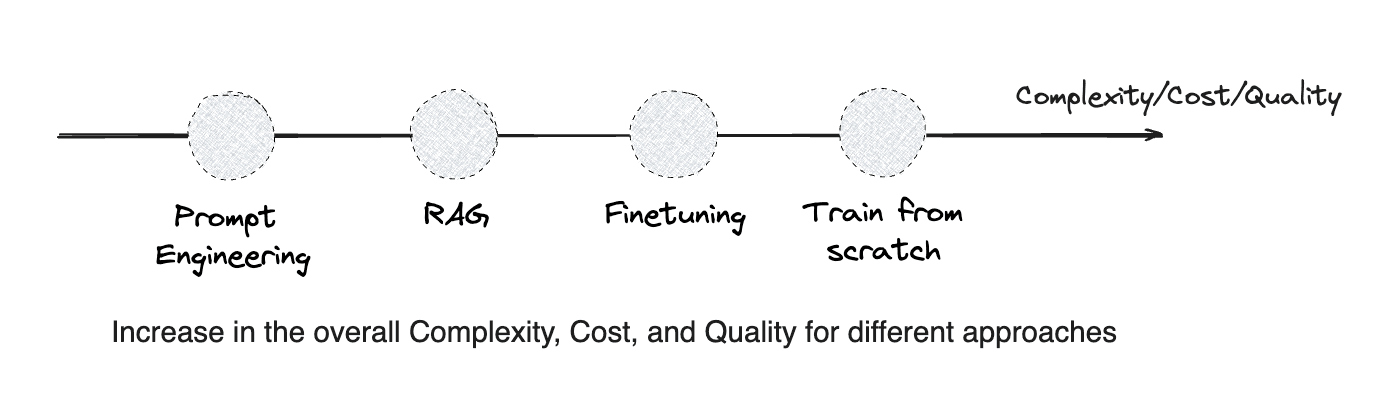

At first glance, RAG might seem the obvious choice for its simplicity and lower cost. We used to illustrate this with simple diagrams, thinking RAG was the go-to unless it fell short, in which case fine-tuning would step in.

But it's not that straightforward. Such diagrams oversimplify the relationship between the two techniques. RAG and fine-tuning don't sit on a single linear scale of cost and complexity; they're orthogonal, addressing different needs. RAG changes what the model can draw on at query time, while fine-tuning changes how the model itself behaves. The choice between them is less about budget and complexity, and more about which process aligns with your AI’s specific tasks.
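One way to picture that orthogonality is as two independent yes/no questions rather than one sliding scale. The helper below is a hypothetical rule of thumb, not an established decision procedure: the function name, the two flags, and the "prompting alone" fallback are assumptions added for illustration.

```python
def recommend(needs_external_knowledge: bool, needs_behavior_change: bool) -> str:
    """Map two independent needs onto an approach (illustrative heuristic only)."""
    if needs_external_knowledge and needs_behavior_change:
        return "RAG + fine-tuning"
    if needs_external_knowledge:
        return "RAG"
    if needs_behavior_change:
        return "fine-tuning"
    # Assumption: with neither need, the base model and a good prompt may be enough.
    return "prompting alone"

# Example: answers must cite current documents, but tone and format are fine as-is.
print(recommend(needs_external_knowledge=True, needs_behavior_change=False))
```

Because the two flags are independent, all four combinations are valid outcomes, which is what a single cost-complexity axis fails to capture.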

Factors to Consider When Evaluating Fine-Tuning and RAG

Compared with training a model from scratch, RAG is efficient in both time and resources, because no model weights are updated: new knowledge is added by updating the document store. That makes it cost-effective and flexible, letting chatbots scale with growing data sets and keep pace with evolving business needs.

Use cases for RAG and fine-tuning

RAG: Imagine a healthcare chatbot tasked with answering patient queries. RAG allows the chatbot to retrieve recent medical research articles and integrate the latest findings into its responses. This helps patients receive current, well-sourced information about treatments and conditions.

Fine-tuning: Consider a financial institution using an AI for sentiment analysis of customer reviews. Fine-tuning allows the AI to learn specific nuances of financial language and customer sentiment, improving its ability to accurately gauge customer satisfaction and sentiment trends over time. This customization ensures the AI can adapt to the unique needs of the financial industry.

Conclusion: Tailoring Your Approach for AI Excellence

Navigating the choice between RAG and fine-tuning isn't about finding a universal fix but aligning strategy with your LLM's specific needs. Each method has its strengths, and in some cases, combining them may offer the best results. It's critical to match the tool to the task, as misalignment can stall progress, while the right choice can significantly enhance performance.

The decision must be made with care: not all methods are created equal, and they are certainly not interchangeable. With thoughtful selection and execution, the right approach, whether RAG, fine-tuning, or both, can unlock more of your LLM's potential and lead to more effective and intelligent AI applications.
