How to Choose the Right LLM for Your Organization

May 14, 2024

Intro

In the rapidly evolving landscape of artificial intelligence, choosing the right Large Language Model (LLM) for your business can be a daunting task. With numerous options available, each boasting unique capabilities and pricing structures, it's essential to make an informed decision that aligns with your organization's needs.

By understanding each model's strengths, weaknesses, and best use cases, technical decision-makers can choose and implement the AI solutions that best suit their enterprises.

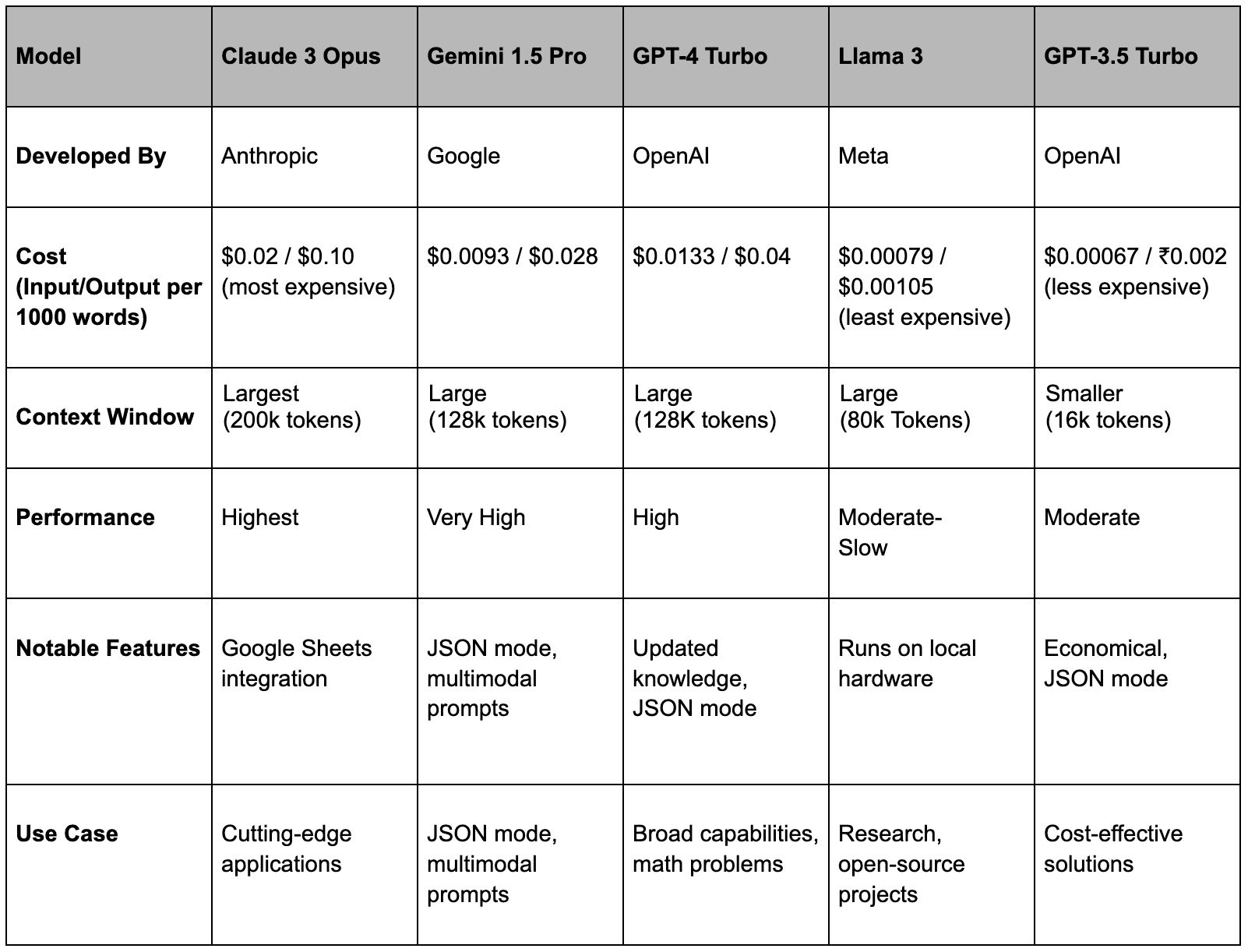

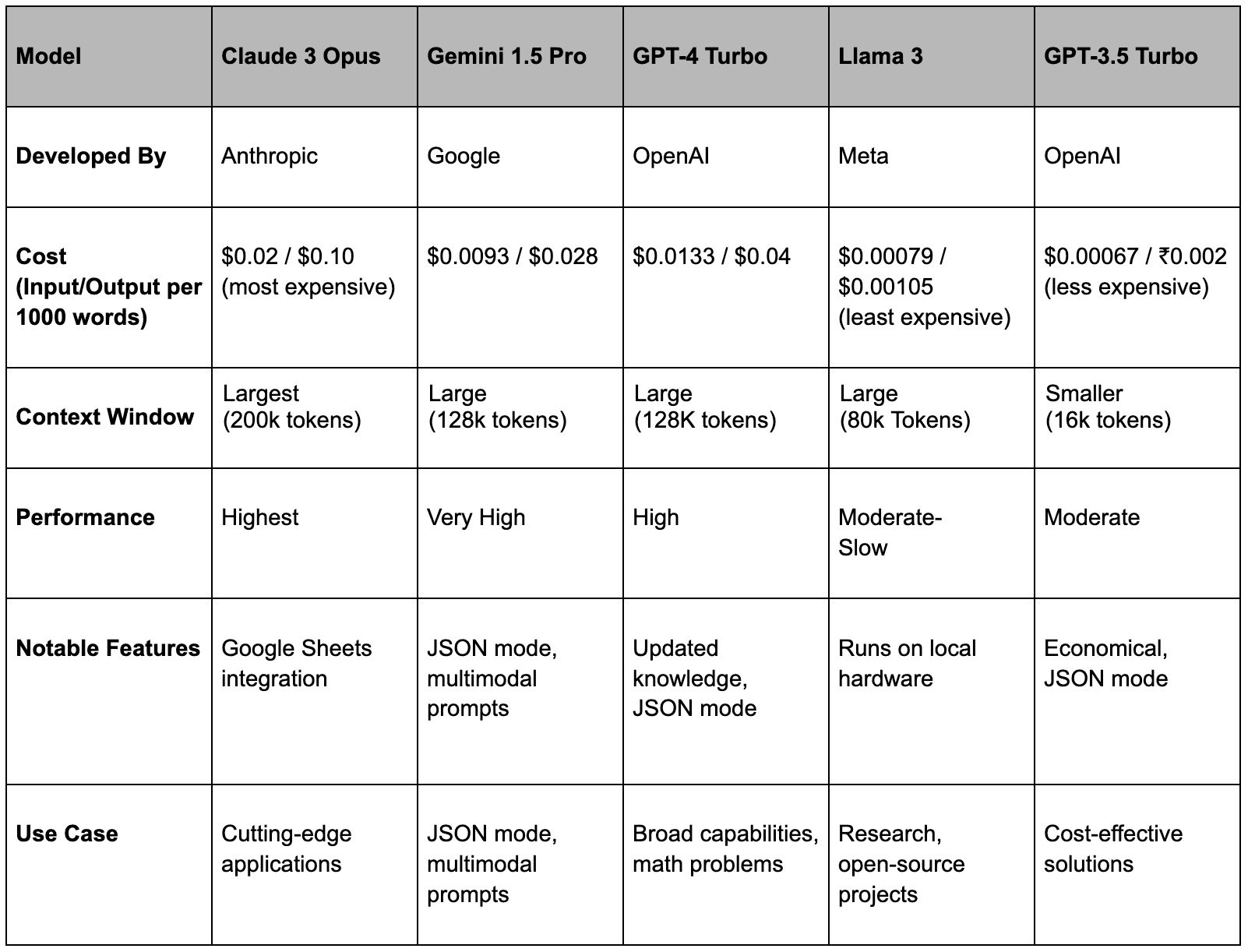

Quick Comparison of Different LLMs

This table provides a snapshot of the key aspects of each model, so you can quickly compare them and identify which LLM might be the best fit for your needs.

Note: Please check the latest pricing details from the official sources.

Understanding the Key Concepts

Before we dive into the models themselves, we’ll go over some basic definitions of the terms you will hear about often when it comes to LLMs. This will help you understand what’s being compared:

Tokens:

Tokens are units of data, typically words or sub-word units like parts of words, single characters, or punctuation marks. These are the fundamental elements language models use to process and generate natural language.
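To make the idea concrete, here is a rough sketch of splitting text into token-like pieces. It is only an approximation: production models use learned sub-word tokenizers, so real token counts will differ. The function name `rough_tokenize` is a hypothetical helper introduced for illustration.

```python
import re


def rough_tokenize(text: str) -> list[str]:
    """Split text into word and punctuation pieces.

    Illustrative only: real LLM tokenizers use learned sub-word
    vocabularies, so their output and counts will differ.
    """
    # \w+ grabs runs of letters/digits; [^\w\s] grabs single punctuation marks.
    return re.findall(r"\w+|[^\w\s]", text)


tokens = rough_tokenize("Choosing an LLM isn't easy!")
print(tokens)
print(len(tokens), "token-like pieces")
```

Note how the contraction "isn't" is split into several pieces, which is one reason token counts usually exceed word counts.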

Input Token:

An input token is a unit of data provided to a system for processing; for an LLM, this is the text you send in the prompt. Depending on the context, it can represent symbols, keywords, identifiers, literals, words, or phrases. Input tokens initiate actions, computations, or analyses within a system.

Output Token:

An output token is a unit of data produced by a system as a result of processing input; for an LLM, this is the text the model generates in its response. It represents the outcome of a computation, analysis, or transformation conducted within the system.
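The distinction matters for budgeting, because hosted LLM providers typically price input and output tokens separately. The sketch below estimates the cost of a single request under that assumption. The function name and the prices in the example are hypothetical placeholders, not figures for any real model; check the official pricing pages for current rates.

```python
def estimate_request_cost(
    input_tokens: int,
    output_tokens: int,
    input_price_per_million: float,
    output_price_per_million: float,
) -> float:
    """Estimate the cost of one request, in the same currency as the prices.

    Assumes per-token pricing quoted per one million tokens, with separate
    rates for input and output tokens.
    """
    input_cost = input_tokens * input_price_per_million
    output_cost = output_tokens * output_price_per_million
    return (input_cost + output_cost) / 1_000_000


# Hypothetical prices, for illustration only.
cost = estimate_request_cost(
    input_tokens=2_000,
    output_tokens=500,
    input_price_per_million=10.0,
    output_price_per_million=30.0,
)
print(f"Estimated cost per request: {cost:.4f}")
```

Multiplying the per-request estimate by expected daily request volume gives a first-order budget figure for comparing models.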

Context Window

A context window is the maximum amount of text, measured in tokens, that an LLM can process at one time. It acts as the "working memory" of the model, defining the limit of how much prior context it can leverage. If a prompt exceeds the context window, the portion outside this window is not seen by the model: depending on the provider, it is either truncated or the request is rejected.
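One common way to stay within the limit is to trim the prompt before sending it. The following is a minimal sketch, assuming the prompt is already a list of tokens and that keeping the most recent tokens is the right policy for your application. The function name and the `reserved_for_output` parameter are hypothetical; real applications often prefer summarizing or retrieving relevant passages over plain truncation.

```python
def fit_to_context_window(
    tokens: list[str],
    context_window: int,
    reserved_for_output: int = 0,
) -> list[str]:
    """Keep only the most recent tokens that fit in the context window.

    `reserved_for_output` leaves room for the model's response, on the
    assumption that prompt and response share the same window.
    """
    budget = context_window - reserved_for_output
    if budget <= 0:
        raise ValueError("No room left for the prompt in the context window")
    if len(tokens) <= budget:
        return list(tokens)
    # Drop the oldest tokens and keep the tail of the prompt.
    return tokens[-budget:]


history = [f"tok{i}" for i in range(10)]
trimmed = fit_to_context_window(history, context_window=8, reserved_for_output=2)
print(trimmed)
```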

Performance

Performance refers to how effectively and accurately the model can understand and generate human-like language across various tasks. High performance indicates the model's ability to produce coherent and contextually appropriate responses.

LLMs and Their Ideal Use Cases

Claude 3 Opus

Claude 3 by Anthropic has revolutionized the LLM landscape since its release in March 2024. Scoring 84.83% on the leaderboard, Claude 3 Opus significantly outperforms other models. One notable feature is its Google Sheets integration, which is highly useful for non-coding applications. However, this high performance comes at a steep cost.

Use Case: Ideal for applications requiring high performance and unique integrations.

Gemini 1.5 Pro

Google's Gemini 1.5 Pro, released as a competitor to GPT-4, offers a large context window and competitive pricing. It outperforms GPT-4 on the leaderboard and includes features like JSON mode and multimodal prompts.

Use Case: Suitable for applications requiring extensive context and multimodal prompts.

GPT-4

GPT-4 Turbo, recently released, is more economical and up-to-date than GPT-4, offering a larger context window and updated knowledge. It is widely used due to its broad capabilities and robust community support.

Use Case: Best for applications needing broad capabilities and strong community support.

Llama 3

Developed by Meta, Llama 3 is among the most capable open-source LLMs. It can be run locally, making it economical and flexible, though inference can be slow depending on the hardware it runs on.

Use Case: Ideal for research and open-source projects where cost is a critical factor.

GPT-3.5

GPT-3.5 Turbo remains relevant for cost-effective solutions. It offers JSON mode and is economical, though it has a smaller context window and moderate performance.

Use Case: Suitable for cost-effective solutions without the need for the latest features.

Comparing Top LLMs across 3 different tasks

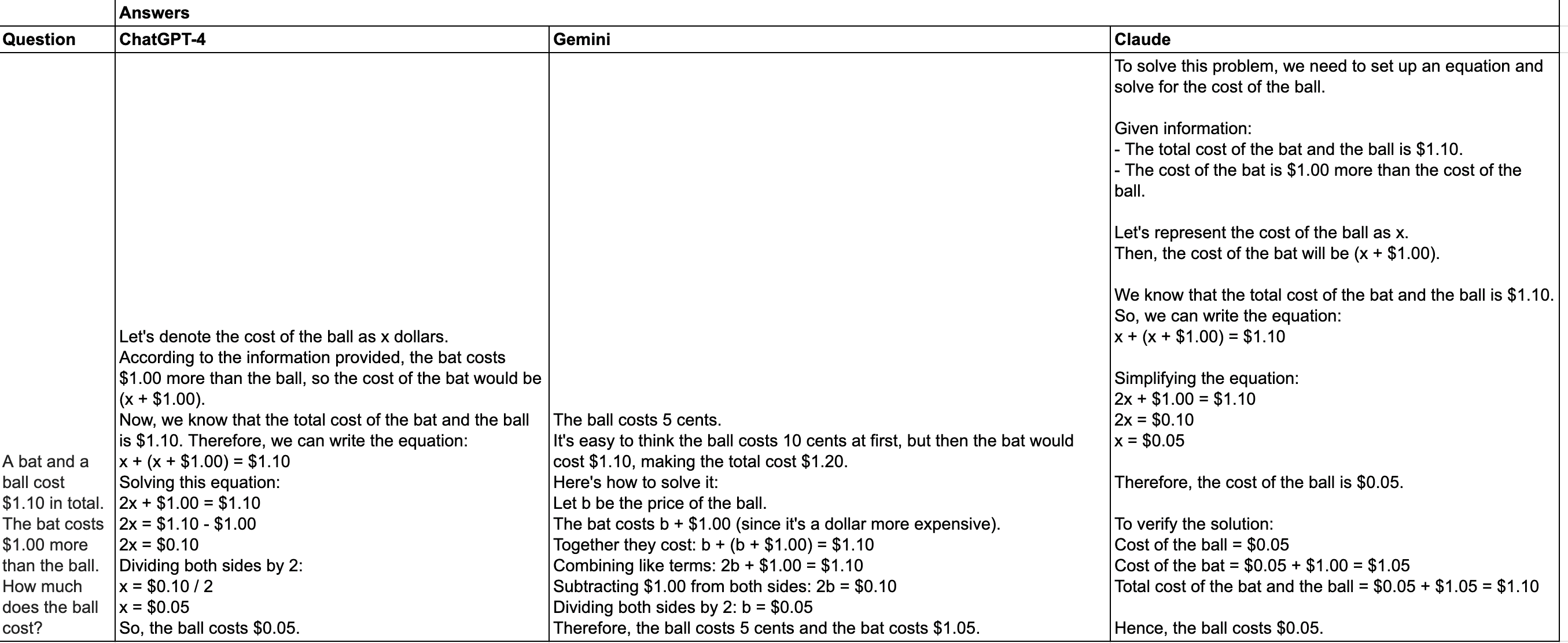

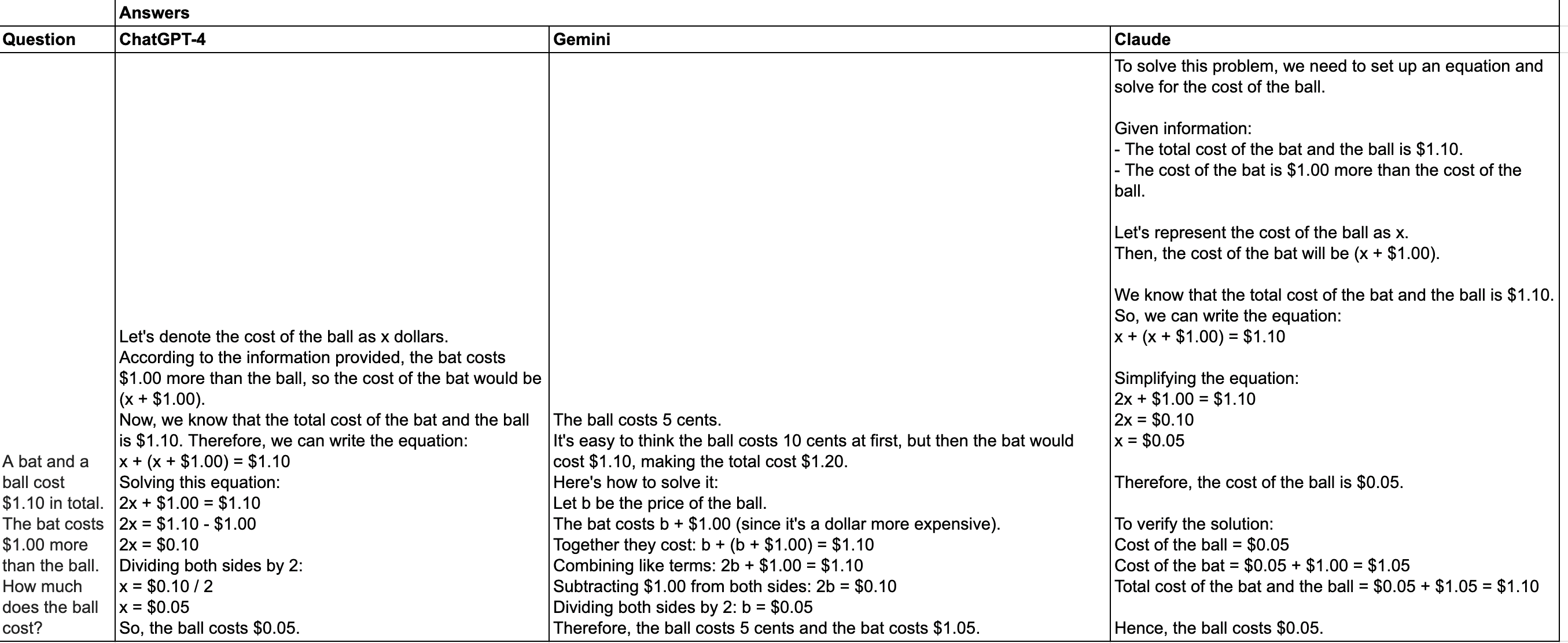

Mathematical task: Solve the problem of a bat and a ball costing $1.10 in total with the bat costing $1.00 more than the ball.

GPT-4: Explains the solution step-by-step, thoroughly breaks down the problem and verifies the solution.

Gemini: The response is concise, directly providing the solution without a detailed explanation.

Claude: Offers a detailed solution using algebra, similar to GPT-4, but with a different approach to explaining the steps. It includes verification of the solution.
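For reference, the algebra behind the correct answer is short: if the ball costs x, the bat costs x + 1.00, so 2x + 1.00 = 1.10 and x = 0.05. The sketch below checks this with exact decimal arithmetic. It is our own illustration, not output from any of the models, and the function name is hypothetical.

```python
from decimal import Decimal


def solve_bat_and_ball(total: Decimal, difference: Decimal) -> tuple[Decimal, Decimal]:
    """Solve ball + bat = total with bat = ball + difference."""
    # Substituting gives 2 * ball + difference = total.
    ball = (total - difference) / 2
    bat = ball + difference
    return bat, ball


bat, ball = solve_bat_and_ball(Decimal("1.10"), Decimal("1.00"))

# Verify both conditions from the problem statement.
assert bat + ball == Decimal("1.10")
assert bat - ball == Decimal("1.00")
print(f"bat = ${bat}, ball = ${ball}")
```

The intuitive answer of $0.10 for the ball fails the check, since the bat would then cost $1.10 and the total would be $1.20.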

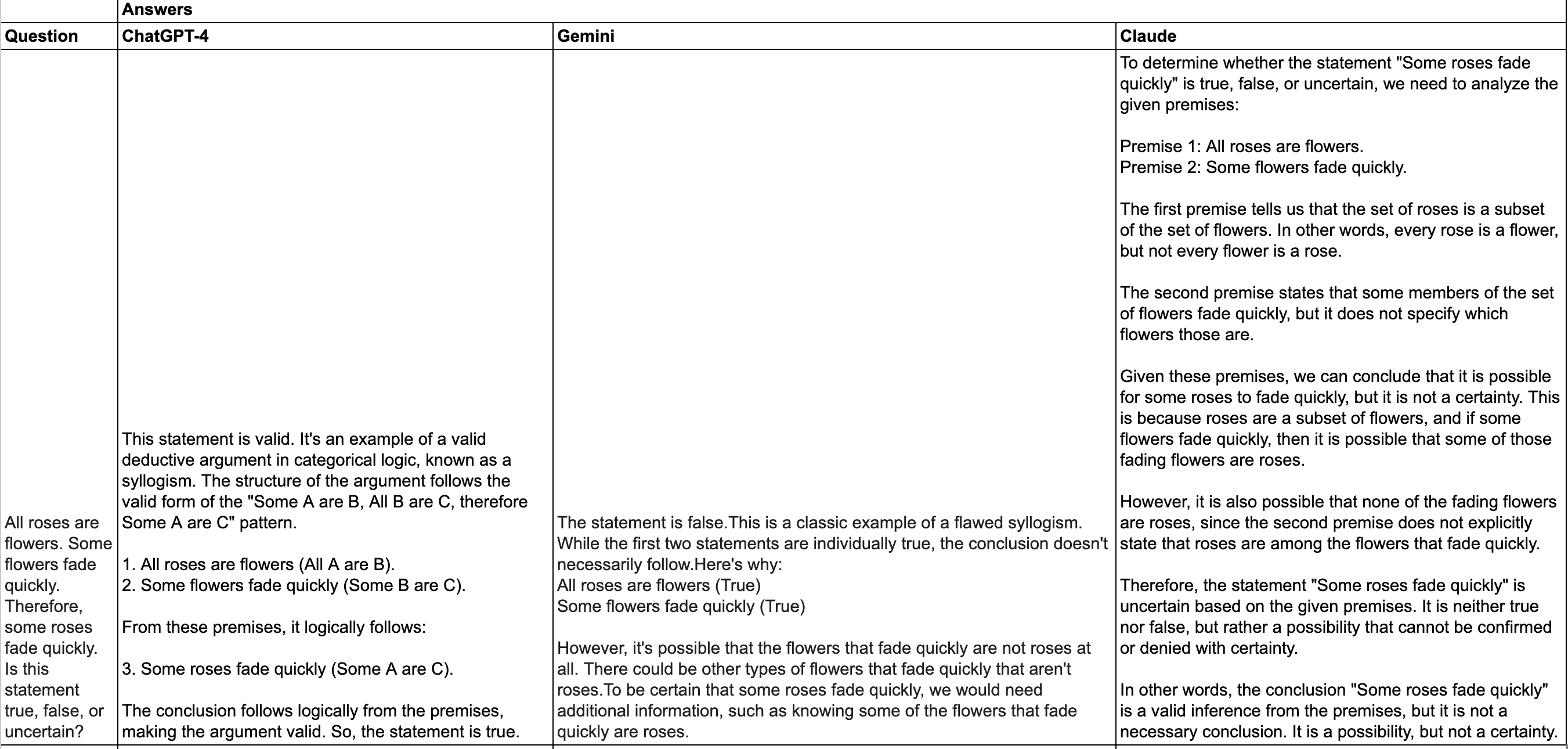

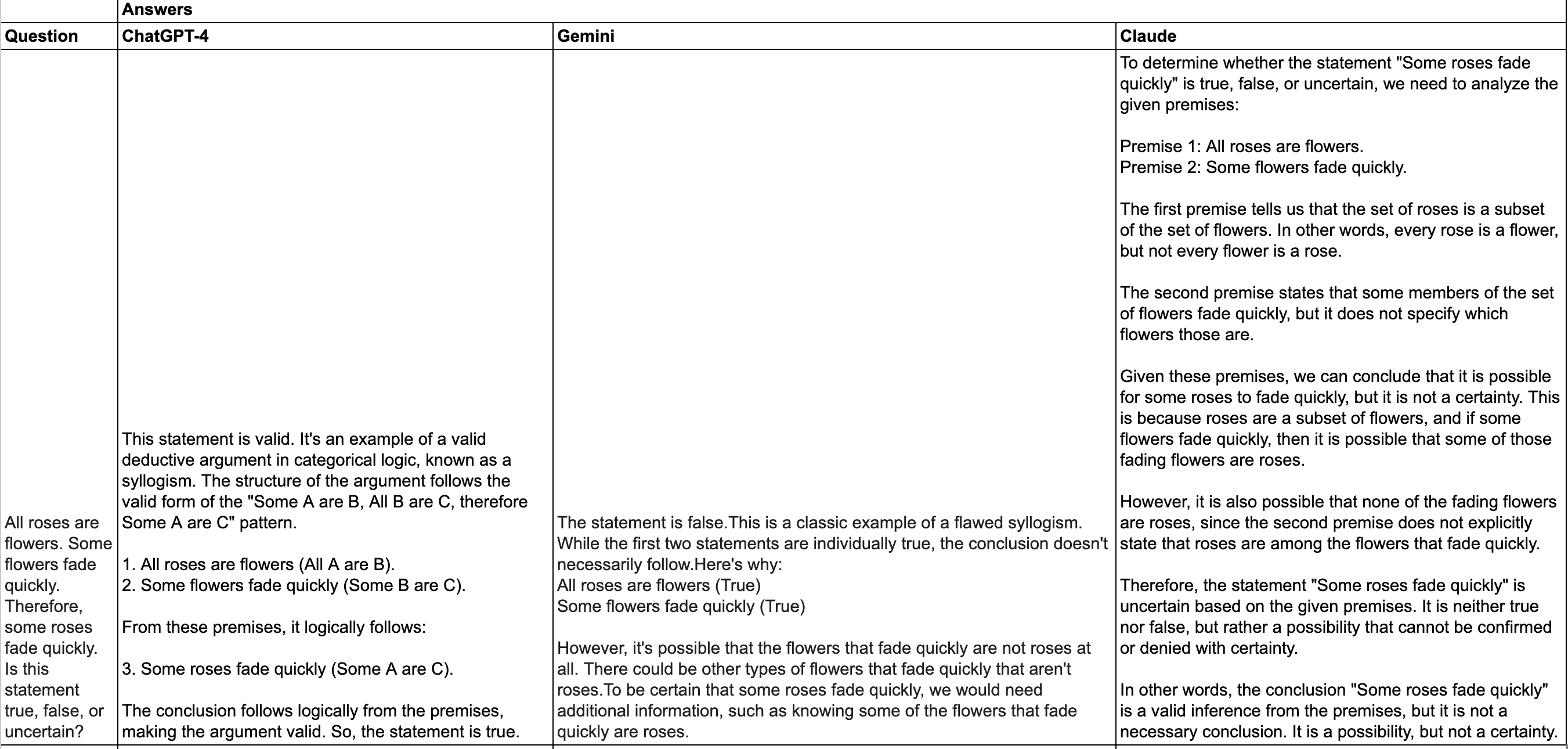

Logical Reasoning: Validate the statement "Some roses fade quickly."

GPT-4: Asserts the statement is valid, explaining the logical structure and form (categorical logic) clearly.

Gemini: The response is confusing.

Claude: Provides a nuanced breakdown of the logical structure and possible interpretations.

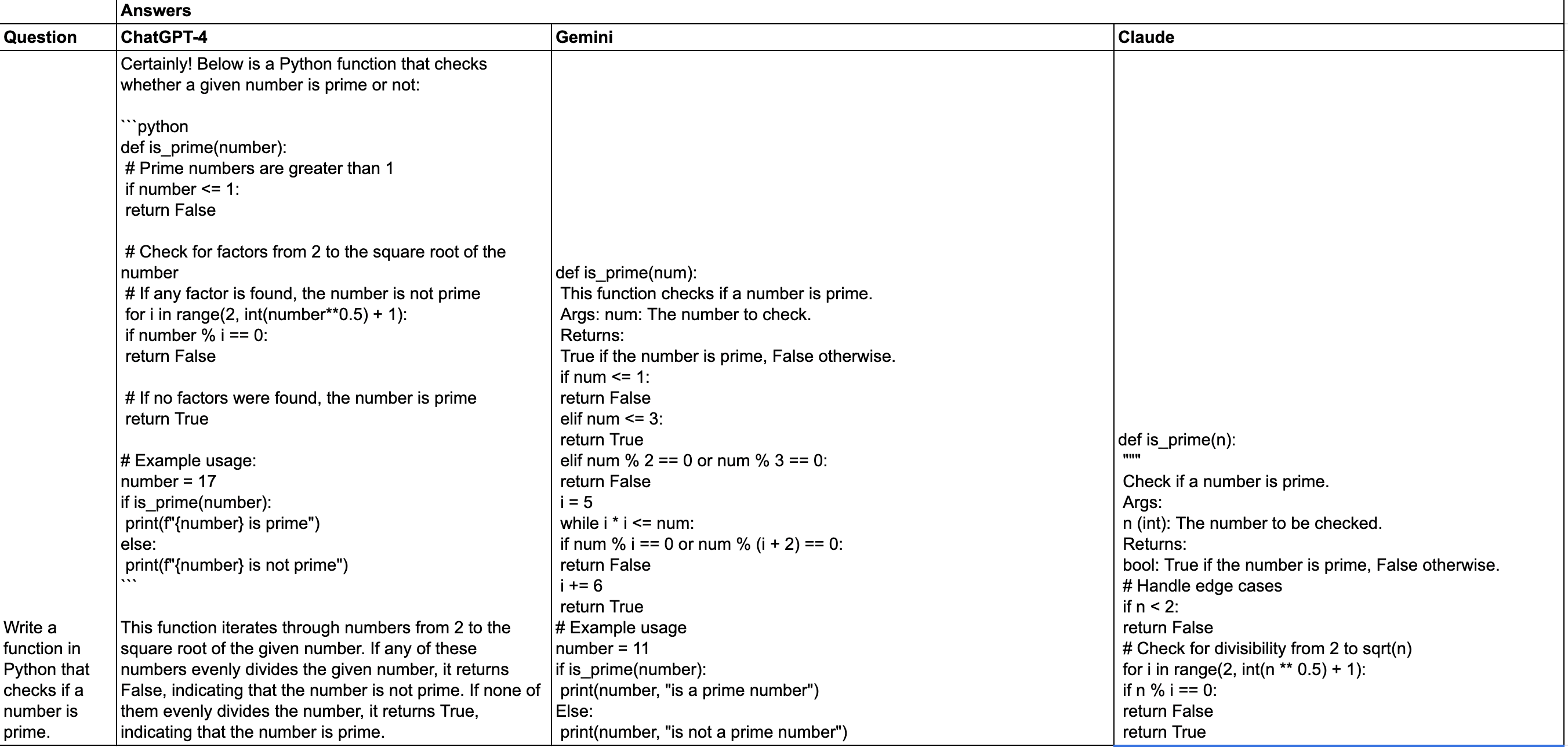

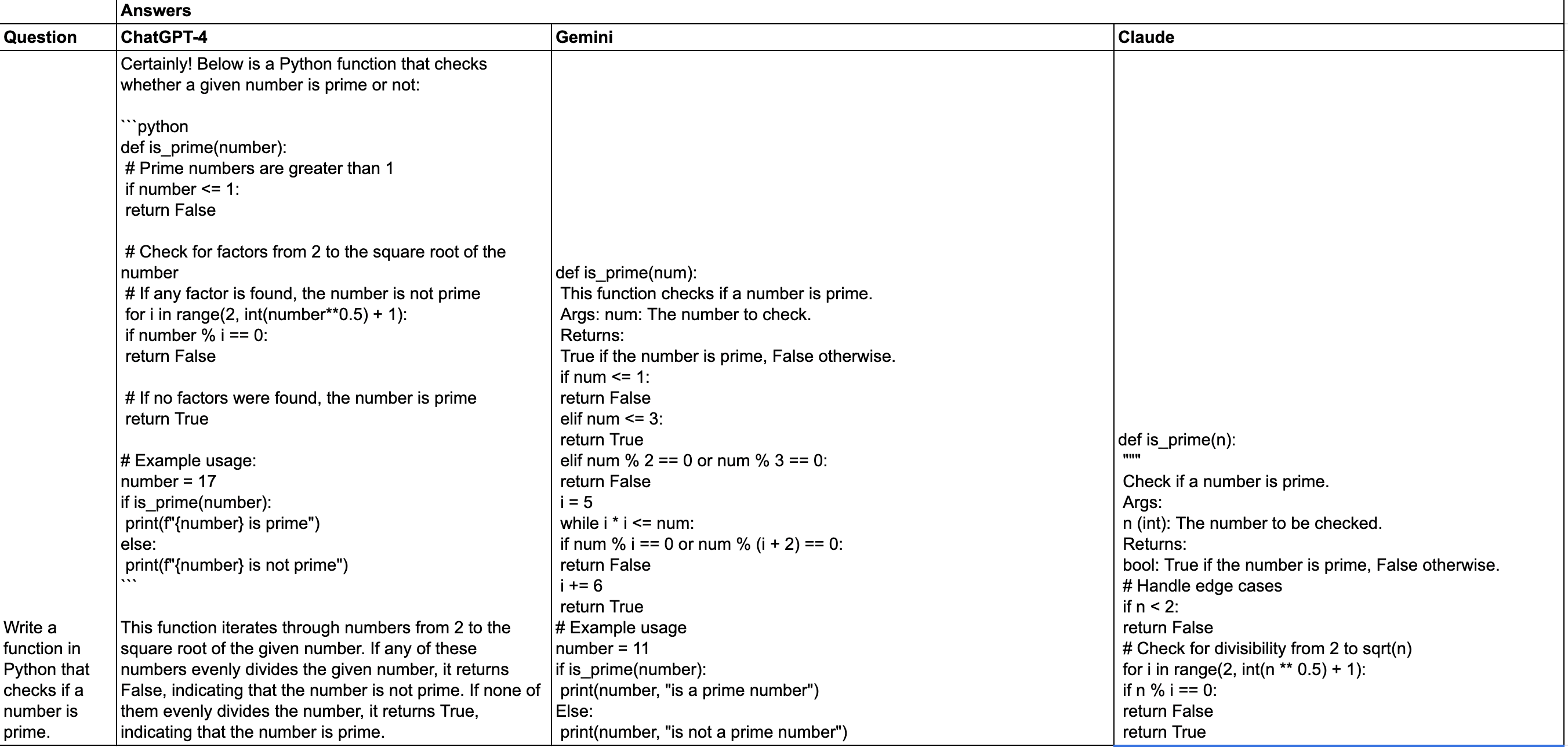

Coding Task: Write a function in Python that checks if a number is prime.

GPT-4: Provides a clear and concise function with comments explaining each step.

Gemini: Offers a detailed function with an extended explanation in the docstring. It handles edge cases and employs a more complex approach.

Claude: Delivers a thorough function with detailed comments and explanations.
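As a baseline for judging such responses, here is one reasonable solution to the task, using trial division up to the square root. It is our own sketch, not a reproduction of any model's answer.

```python
import math


def is_prime(n: int) -> bool:
    """Return True if n is a prime number, using trial division."""
    if n < 2:
        return False  # 0, 1, and negative numbers are not prime
    if n < 4:
        return True  # 2 and 3 are prime
    if n % 2 == 0:
        return False  # even numbers greater than 2 are not prime
    # Only odd divisors up to sqrt(n) need checking.
    for divisor in range(3, math.isqrt(n) + 1, 2):
        if n % divisor == 0:
            return False
    return True


print([n for n in range(20) if is_prime(n)])
```

When comparing model outputs against a baseline like this, useful things to check are the handling of values below 2, correct results for perfect squares such as 9 and 25, and whether the loop stops at the square root rather than at n.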

Summary

Claude 3 Opus: Excels in detailed explanations and handling complex logical reasoning tasks, offering thorough and accurate responses.

Gemini 1.5 Pro: Provides detailed and practical solutions for coding tasks, though some responses may lack completeness in other areas.

GPT-4: Balances clarity and depth, offering comprehensive explanations and step-by-step solutions across various tasks.

Conclusion

Choosing the right Large Language Model (LLM) for your business can be simplified by understanding each model's strengths and use cases. Whether you need the detailed explanations of Claude 3 Opus, the extensive context handling of Gemini 1.5 Pro, or the balanced capabilities of GPT-4, there’s an LLM tailored to your needs.

For research or budget-conscious scenarios, Llama 3 and GPT-3.5 Turbo offer excellent alternatives. As you evaluate your requirements—performance, cost, or unique features—these insights will guide you in selecting the best LLM for your enterprise.

Now, take the next step: explore these LLMs further, experiment with them, and integrate the best AI solutions into your business. Start your AI journey today and transform your operations with tailored AI solutions.

Sources

Deciphering LLM Costs: Pricing and Context Window Comparison
